I’m excited to announce that this is the first post of the Engineering Innovation Newsletter in partnership with Good Science Project. Good Science Project is a new organization dedicated to improving the funding and practice of science. The founder and Executive Director, Stuart Buck, has many years of high-level experience in the space and I’m excited to be working with him and the rest of the organization’s fantastic board, advisors, and funders. As a Fellow with Good Science Project, I’ll continue writing pieces with the same goal: providing evidence-based, historically and statistically informed, arguments on how to build a more effective future of innovation.

Enjoy and please subscribe if you’d like to see more posts from me and the Good Science Project! Another link to this piece along with an executive summary can be found here on the Good Science Project site.

Predictions of future economic progress from our current vantage point place major emphasis on continuing advances made possible by medical research, including the decoding of the human genome and research using stem cells. It is often assumed that medical advances have moved at a faster pace since the invention of the antibiotics in the 1930s and 1940s, the development from the 1970s of techniques of radiation and chemotherapy to fight cancer, and the advent of electronic devices such as the CT and MRI scans to improve diagnoses of many diseases. Many readers will be surprised to learn that the annual rate of improvement in life expectancy was twice as fast in the first half of the twentieth century as in the last half. — Robert Gordon, The Rise and Fall of American Growth

No other documented period in American history even comes close. In this piece, I’ll go over what caused this massive decline in mortality rate and what we should have learned from this period — but didn’t.

One major cause of death almost disappeared

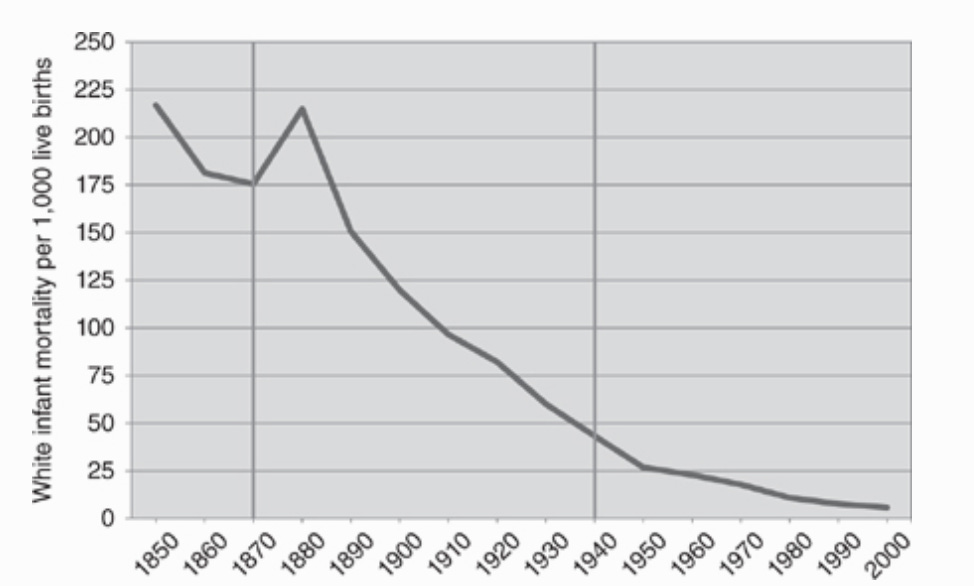

This effect is well-explored in Robert Gordon’s book, The Rise and Fall of American Growth. Before around 1880, there was little to no improvement in American mortality rates or life expectancy. In 1879, male and female life expectancy at age 20 was similar to their counterparts in 1750. Infant mortality rates, around 215 per 1,000 live births in 1880, were similar to infant mortality rates dating as far back as Tudor England.

But, suddenly, things began to improve. For example, giving birth became much safer. In the figure below, for every 1,000 births, the number of lives saved by improvements from 1890 to 1950 was 188, while the number saved from 1950 to 2010 was just 21. Gordon plots this steep decline.
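To make the size of that gap concrete, here is a minimal arithmetic sketch using only the two figures quoted above. The variable names are my own, and the per-year numbers are simple averages over each 60-year window, not anything Gordon reports.

```python
# Figures quoted in the text: lives saved per 1,000 births in each period.
saved_1890_1950 = 188
saved_1950_2010 = 21

# Both windows span 60 years, so the totals can be compared directly.
years_early = 1950 - 1890
years_late = 2010 - 1950

# How many times larger the early-period improvement was.
ratio = saved_1890_1950 / saved_1950_2010

# Simple averages per year (an illustration, not a figure from the book).
per_year_early = saved_1890_1950 / years_early
per_year_late = saved_1950_2010 / years_late

print(f"Early period saved {ratio:.1f}x as many lives per 1,000 births")
print(f"Average per year: {per_year_early:.2f} vs {per_year_late:.2f}")
```

Because the two windows are the same length, the roughly ninefold gap holds whether you compare totals or per-year averages.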

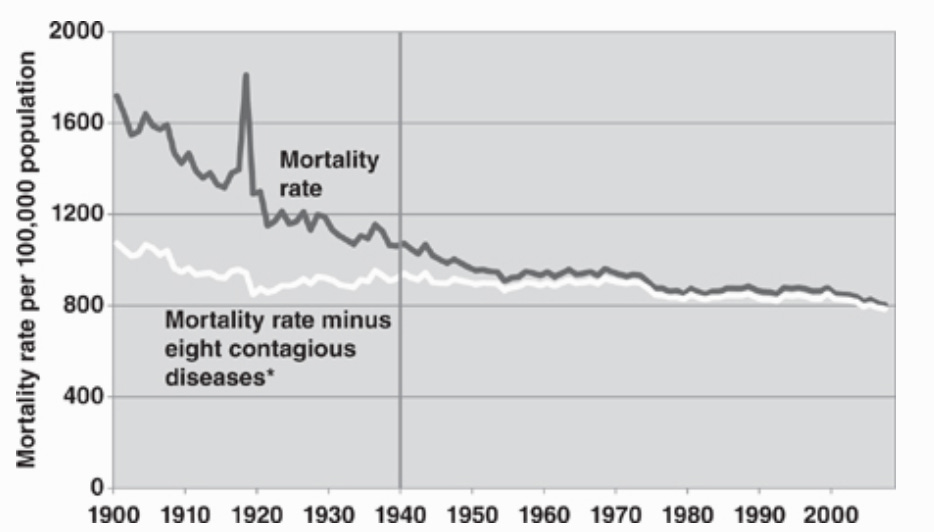

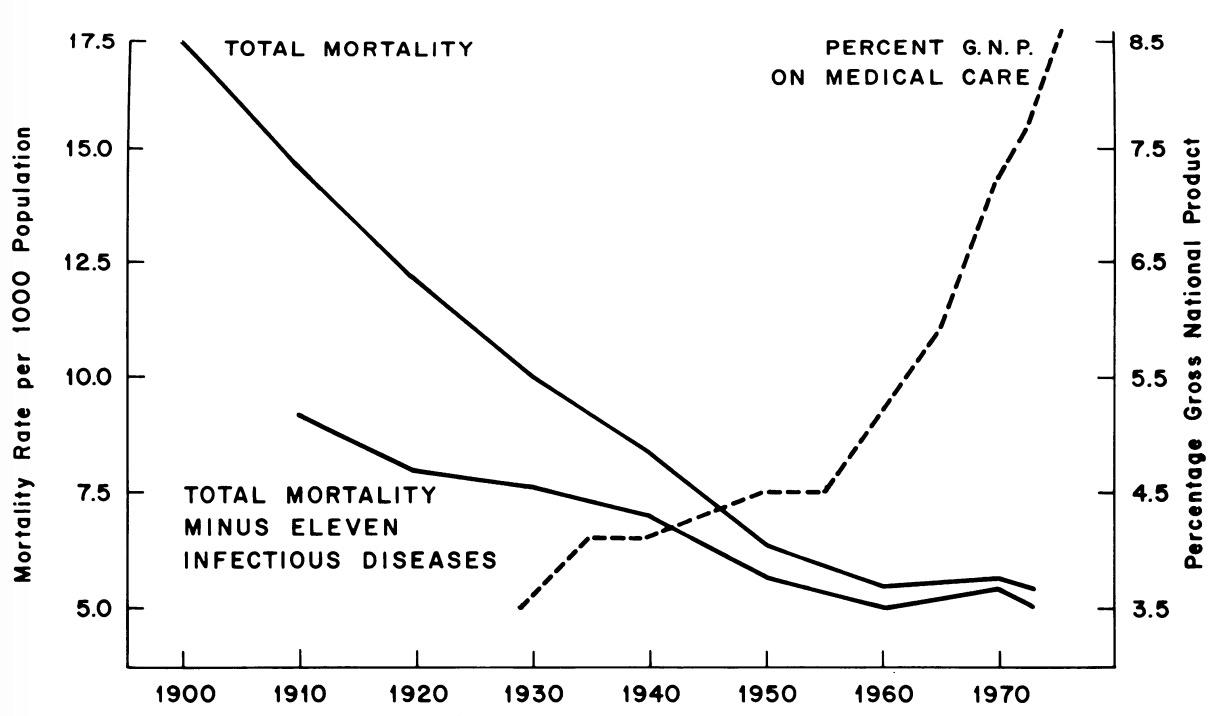

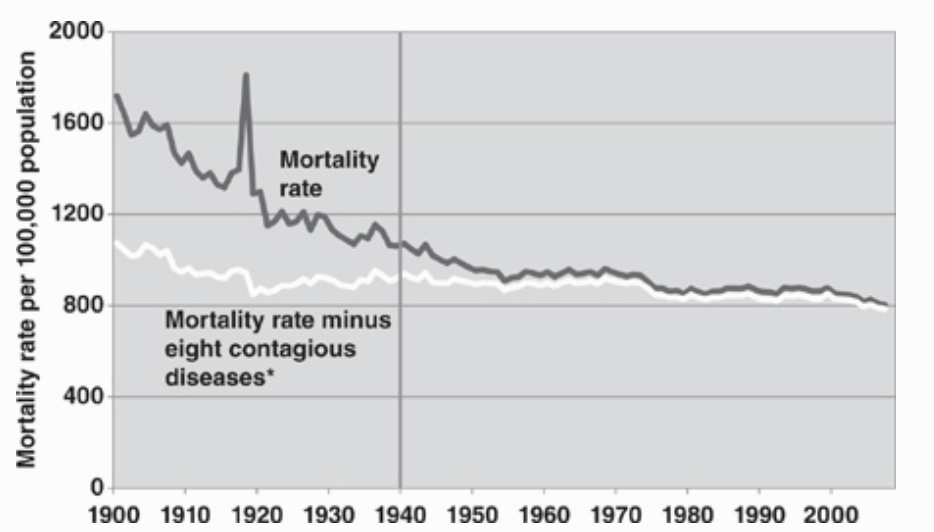

This reduction in infant mortality was primarily due to the reduction in infectious disease deaths. But infectious diseases were a problem for all. Gordon goes on to show a graph that demonstrates just how much of the massive improvement in mortality rates was due to reductions in deaths due to contagious diseases.

This trouncing of the infectious disease problem made society’s most dangerous medical problem almost obsolete within half a century. In 1900, 37% of deaths were caused by infectious diseases. By 1955, the number was down to less than 5%. Today, it’s down to around 2%.

What did NOT lead to this victory over infectious diseases

In the early 1970s, it was becoming clear from the mortality statistics that we were not seeing the same rate of progress that we’d grown accustomed to in recent decades.

John and Sonja McKinlay’s outstanding paper, “The Questionable Contribution of Medical Measures to the Decline of Mortality in the United States in the Twentieth Century”, attacked the common belief that the introduction of more modern medical measures such as vaccines and the expansion of the modern hospital were largely responsible for this mortality decline.

They lay out their initial reasoning:

If a disease X is disappearing primarily because of the presence of a particular intervention or service Y, then clearly Y should be left intact, or, more preferably, be expanded. Its demonstrable contribution justifies its presence. But, if it can be shown convincingly, and on commonly accepted grounds, that the major part of the decline in mortality is unrelated to medical care activities, then some commitment to social change and a reordering of priorities may ensue. For, if the disappearance of X is largely unrelated to the presence of Y, or even occurs in the absence of Y, then clearly the expansion and even the continuance of Y can be reasonably questioned. Its demonstrable ineffectiveness justifies some reappraisal of its significance and the wisdom of expanding it in its existing form.

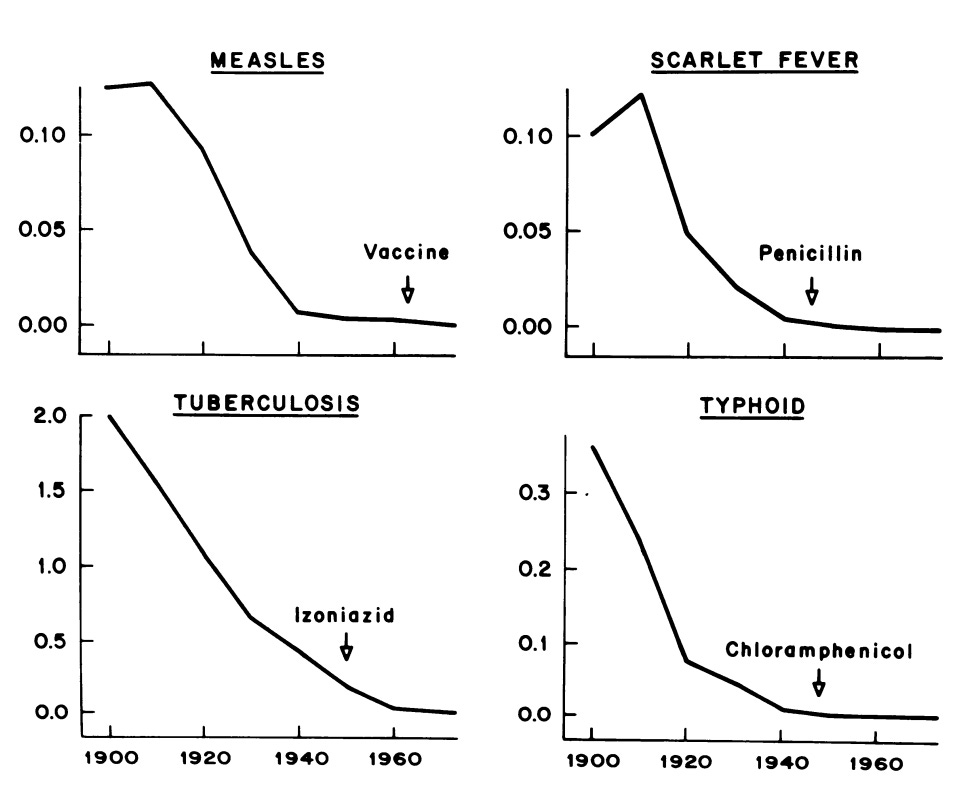

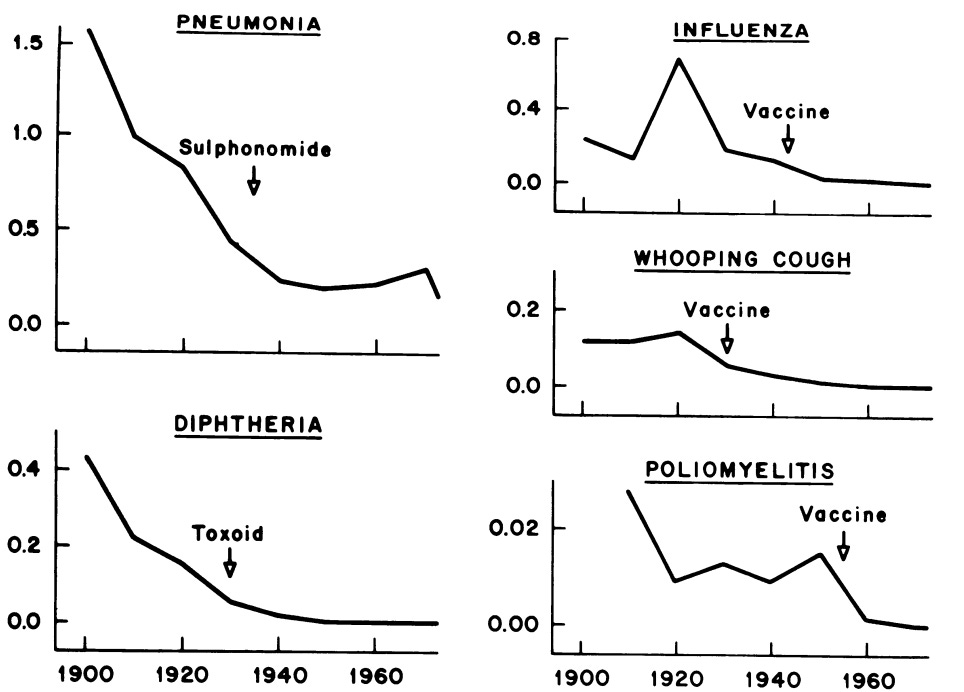

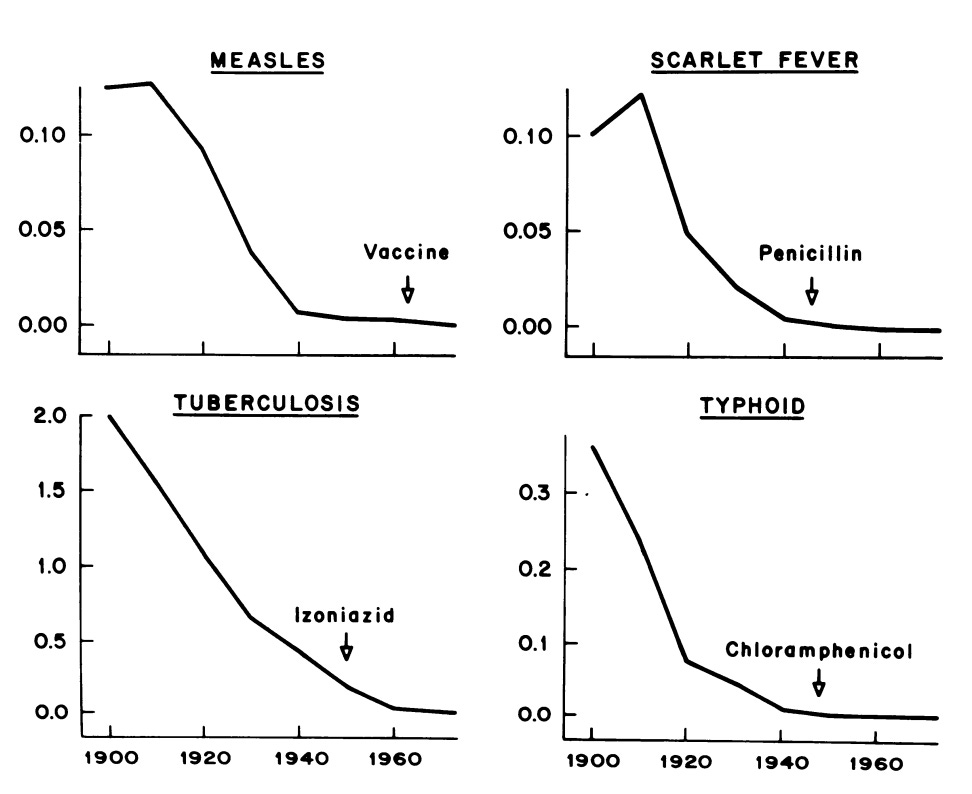

Building on this line of logic, they go on to show a series of graphs that should put to bed any reasonable hypothesis that the modern vaccines and medicines for these contagious diseases were the primary mechanisms that saved lives. The graphs below, with their 1970s visual simplicity, are extremely effective communicators of the authors’ point. The line in each graph represents the decreasing mortality due to that disease. And, above each line, there is an arrow showing when a formal “medicine” for that disease became common.

One can argue that these medicines and vaccines were a second, useful measure in ensuring that these contagious disease rates continued to decrease longer than they may have otherwise. But it seems clear that the battle was largely won long before these medicines came along.

The authors go on to estimate that 3.5% probably represents “a reasonable upper-limit” of the total fall in the death rate that could be attributed to medical interventions in the major infectious disease areas. And, while surely one could dispute whether the true effect was something slightly higher, the effect cannot have been substantial since so much of the work was already done by the time the treatments came around. Something that looks much less like ‘modern medical science’ had solved the problem already.

Also, it should be noted that this reduction almost entirely preceded the modern phenomenon of skyrocketing American spending on healthcare that brought advanced therapies and medicines through the hospital system.

The McKinlays show this in the graph below.

This graph ends in about 1972, but the increase in US medical spending did not. The percent of GDP spent on medical care today is around 20%.

If not medicine, then what was it?

The McKinlays’ work went a long way in establishing that the main cause of this improvement in mortality rates was not what people seemed to think it was: the modern medical system. Later work would go further in identifying the causes of these trends. And, based on that literature, it seems like many changes affecting public health from the early 1900s played a role. These include: the expanded use of window screens to keep disease-carrying bugs out of the home, the establishment of the FDA, horses being phased out by the internal combustion engine which left streets much more sanitary, and more.

But, based on the work of Grant Miller and David Cutler, there seems to be a single intervention that might have been almost as important as all the others combined in bringing about change: cleaner water sources. The implementation of these improved water management systems might be the most impressive work ever carried out by this country’s city governments.

The introduction of these systems directly coincided with the drop-off in mortality due to infectious diseases. Urban households were particularly afflicted by the infectious disease problem, and the percentage of them with filtered water in the US was only 0.3% in 1880, 6.3% in 1900, 25% in 1910, 42% in 1925, and 93% by 1940.
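As a rough illustration of how uneven that rollout was, the sketch below turns the adoption figures quoted above into average percentage-point gains per year for each interval. The function name and structure are my own, and the calculation assumes straight-line growth between the quoted years, which the source does not claim.

```python
# Share of urban US households with filtered water, as quoted in the text.
filtered_share = {1880: 0.3, 1900: 6.3, 1910: 25.0, 1925: 42.0, 1940: 93.0}


def gains_per_year(series):
    """Return (start, end, percentage points gained per year) per interval.

    Assumes linear growth between data points (an illustrative simplification).
    """
    years = sorted(series)
    out = []
    for start, end in zip(years, years[1:]):
        pp_per_year = (series[end] - series[start]) / (end - start)
        out.append((start, end, round(pp_per_year, 2)))
    return out


rates = gains_per_year(filtered_share)
for start, end, rate in rates:
    print(f"{start}-{end}: {rate} percentage points per year")
```

On these numbers, the pace of adoption picks up sharply after 1900 and is fastest in the final interval, 1925 to 1940.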

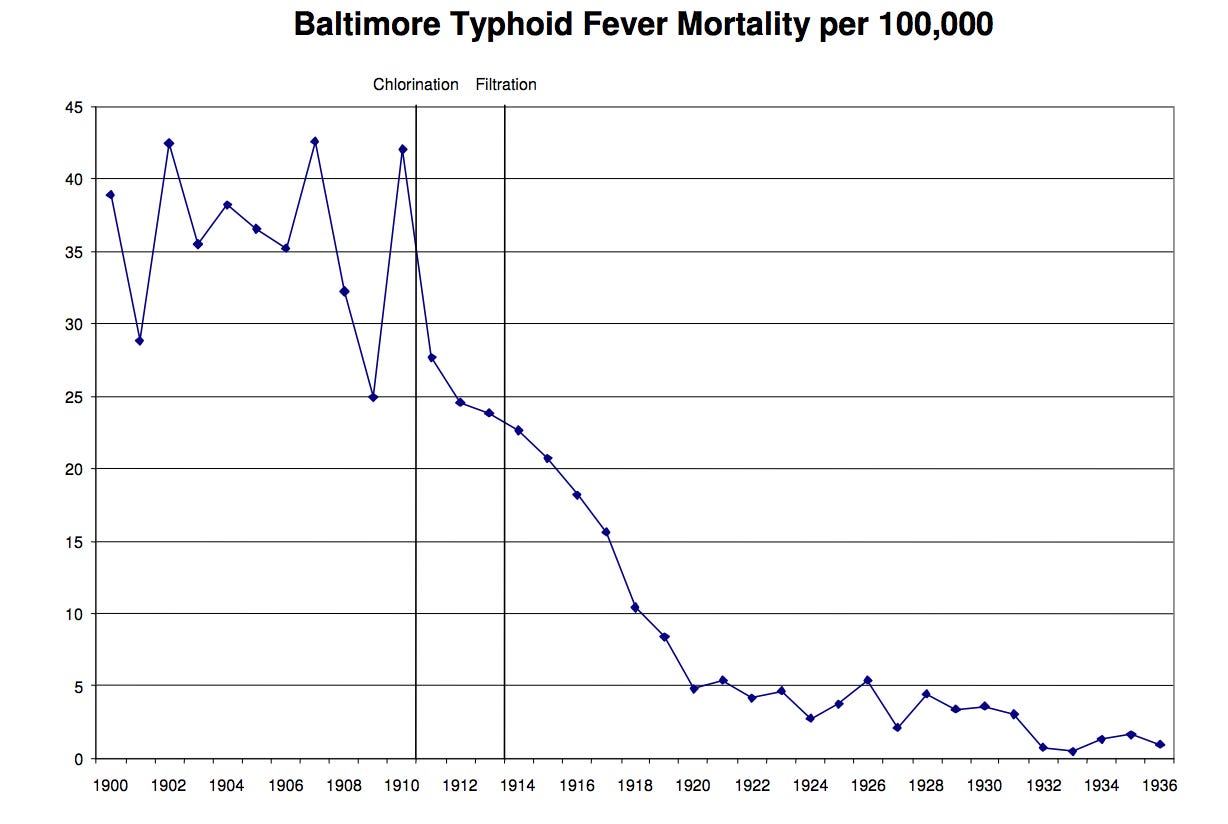

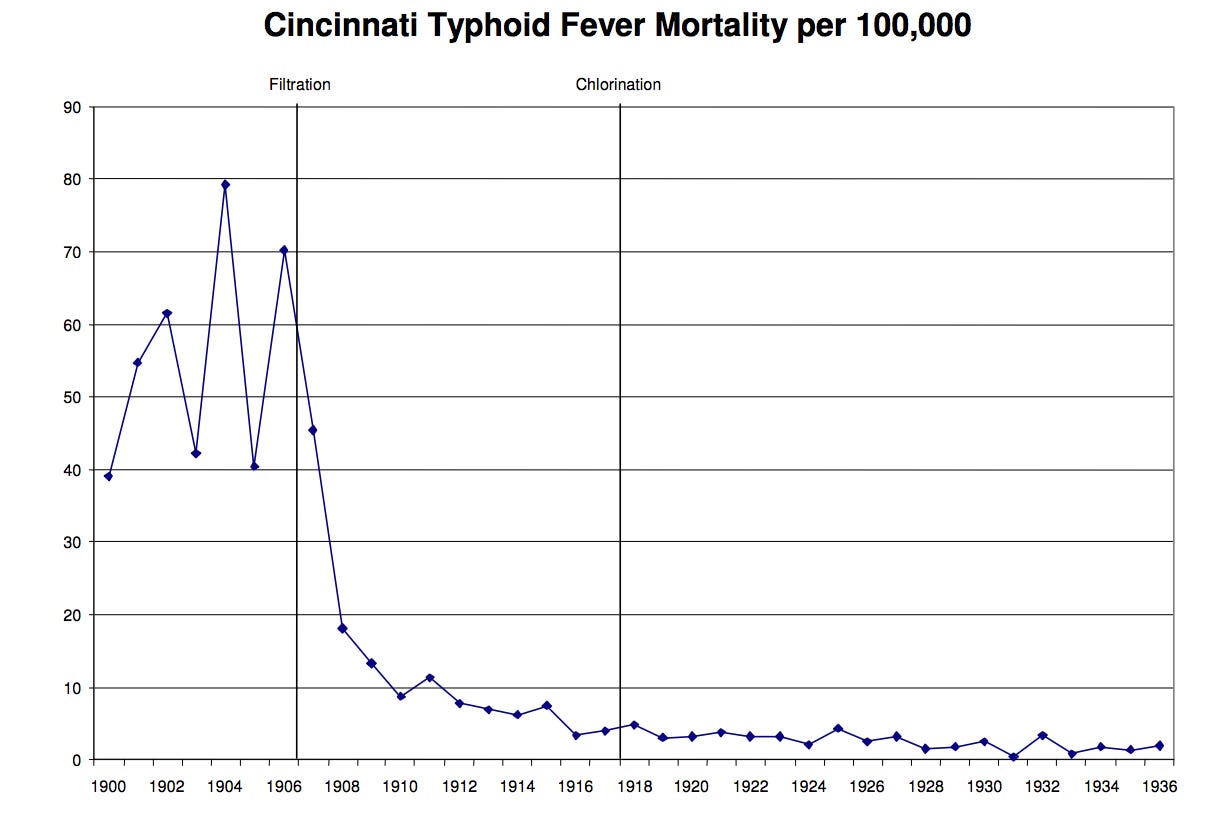

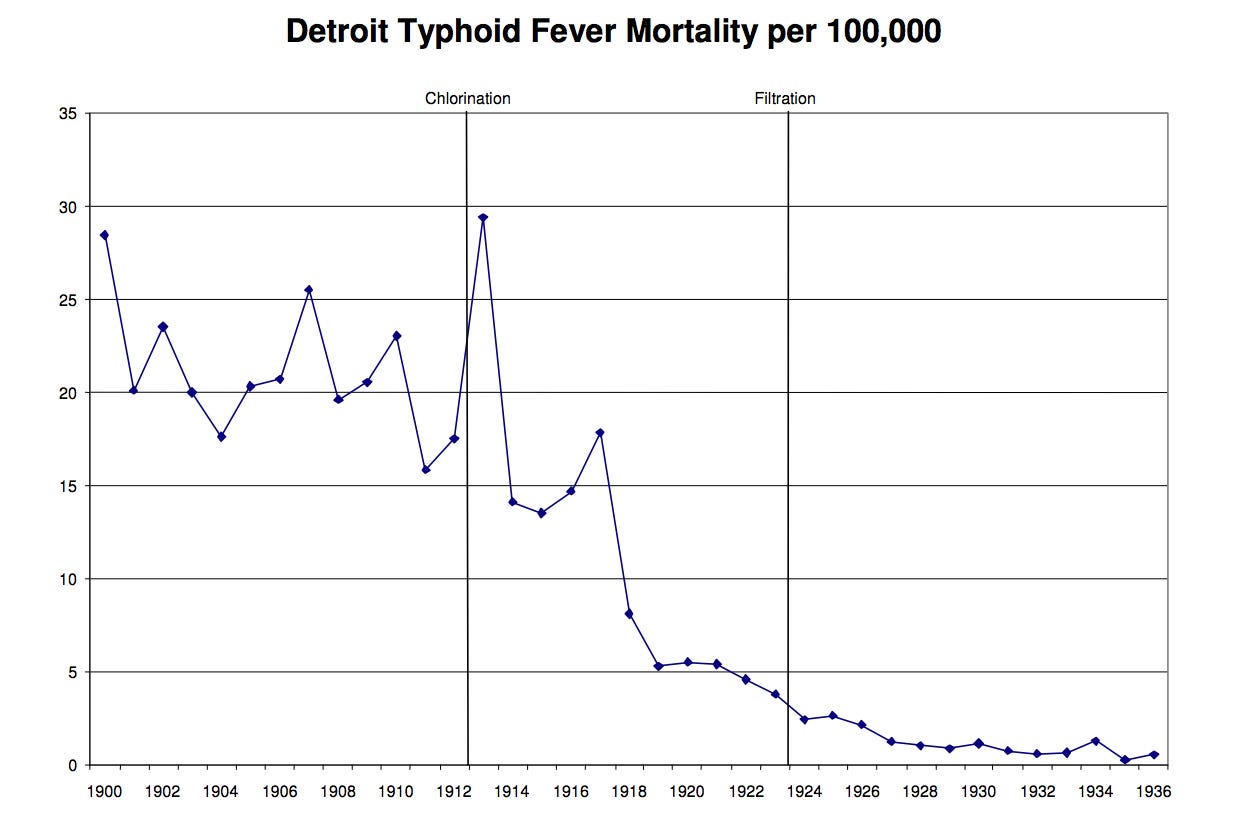

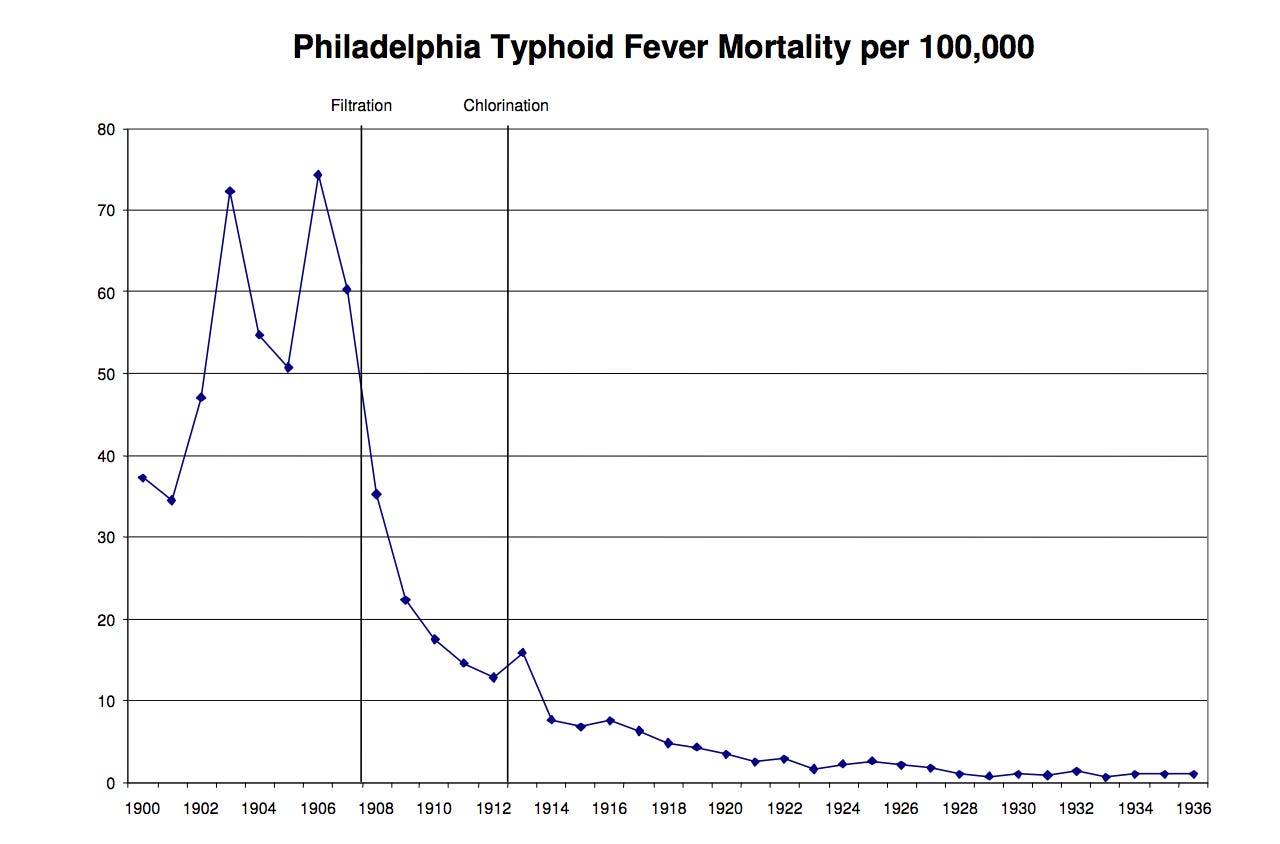

Cutler and Miller went on to identify all of the major cities with clear records of when water chlorination and filtration technologies became widely available. The precise year in which these technologies were built in each city was quite arbitrary because they were the result of often decades-long partisan bickering, planning delays, etc. For these 13 cities, the authors made a graph for each showing the mortality rate of typhoid fever and when the city-provided chlorination or filtration systems began reaching the majority of individuals. (As a note, these two technologies are substitutes to some extent.)

All 13 graphs look more or less like the following, with major drops in mortality immediately following the implementation of a clean water system.

Some of the reductions seem to begin immediately before the vertical lines, which is to be expected, because the authors drew the line to coincide not with the first year a clean-water system was implemented but, instead, the year in which it finally reached the majority of citizens.
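One way to picture the comparison across cities is to re-index each city's mortality series by years relative to its own adoption year and then average across cities. The sketch below does this on HYPOTHETICAL toy numbers that I invented purely for illustration. They are not Cutler and Miller's data, and this simple averaging is not their statistical method.

```python
# HYPOTHETICAL toy data: invented for illustration only, NOT taken from
# Cutler and Miller. Rates are in arbitrary units.
toy_cities = {
    "City A": {
        "adoption_year": 1908,
        "typhoid_rate": {1905: 40, 1906: 38, 1907: 35, 1908: 30, 1909: 18, 1910: 12},
    },
    "City B": {
        "adoption_year": 1912,
        "typhoid_rate": {1909: 50, 1910: 49, 1911: 44, 1912: 36, 1913: 20, 1914: 15},
    },
}


def align_to_event(city):
    """Re-index a city's series by years relative to its adoption year."""
    t0 = city["adoption_year"]
    return {year - t0: rate for year, rate in city["typhoid_rate"].items()}


def average_by_event_time(cities):
    """Average the rate across cities at each relative year."""
    buckets = {}
    for city in cities.values():
        for rel_year, rate in align_to_event(city).items():
            buckets.setdefault(rel_year, []).append(rate)
    return {rel: sum(v) / len(v) for rel, v in sorted(buckets.items())}


profile = average_by_event_time(toy_cities)
for rel_year, avg_rate in profile.items():
    print(f"year {rel_year:+d}: average rate {avg_rate}")
```

In the toy series the decline starts a little before year 0. That mirrors the point above: the marker is the year a system reached the majority of citizens, not the year it first came online.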

The authors then go on to use much more robust statistical methods to estimate that clean water and filtration systems explained half of the overall reduction in mortality in the early 1900s, 75% of the decline in infant mortality, and 67% of the decline in child mortality.

And this was all comparatively cheap too. It was estimated that this whole process cost roughly $500 per life-year saved. And that’s if you assume that cities were only doing this to save lives, which was not at all the case.

The city governments did not fully understand the potential impact of these water systems prior to building them

The germ theory of disease was beginning to become more widespread, but that should not be mistaken as the primary reason this water infrastructure was undertaken when it was. As Miller and Cutler point out in a different 2004 piece:

Dirty water was believed to be causally linked to disease long before the bacteriological revolution. The first demonstration of the link between unclean water and disease was John Snow’s famous demonstration of how cholera spread from a single water pump in London in the 1850s. Snow had premonitions of the germ theory, but it took several more decades for the theory to be fully articulated.

The prevailing theory at the time, the miasma theory of disease, held that a variety of illnesses are the result of poisonous, malevolent vapors (“miasmas”) that are offensive to the smell.

The general knowledge that dirty water was likely connected to the spread of some diseases and, to some extent, could be mitigated was not new. Since ancient times, people have understood that keeping feces away from food and water was good for health. What was new, as Cutler and Miller point out, was the ability to more easily raise bonds to build infrastructure like these water systems at the turn of the century. So they built!

And, even with the germ theory of disease technically in existence, it seems clear that people were quite confused. For example, a noteworthy event that encouraged urban populations to push to adopt clean water systems was the Memphis yellow fever epidemic of 1878 which killed 10% of the city’s population. Many thought it was due to contaminated water and, naturally, this led individuals in other cities to be more eager to adopt new water infrastructure. But the Memphis yellow fever epidemic was not caused by contaminated water at all. It turns out that the Memphis epidemic was caused by mosquitos.

And, even though it was understood that cleaner water would save some number of lives from some diseases somehow, it’s clear that this need was considered a bit amorphous. The need didn’t seem as tangible in a city government committee as, say, the need for water to put out fires. In a 1940 text on public water supplies written for use by instructors in technical schools, Turneaure and Russell write:

Too often a [water] supply for a village is designed with almost exclusive reference to fire protection, and little attention is paid to the quality of the water, the people expecting to depend on wells as before.

Even as late as 1940, the priority of a water supply for many, particularly in smaller towns, was to put out fires. This is not because they irrationally feared dying in a fire far more than dying from an infectious disease. Rather, it was widely understood that these public water supplies reduced insurance rates by orders of magnitude. It was a bargain!

It was much easier to count dollars saved than hypothetical added life years. To this point, Turneaure and Russell’s text even went on to note that a clean water supply could enhance property values. Writing their text for the nation’s future engineers of these water systems, they understood that these were the data points that might sway a frugal city government to put these measures into action.

Progress can be made without understanding all of the scientific mechanisms of a disease

All things equal, of course, it is better to understand everything about how a disease works before attempting to fight it. But the war on infectious diseases shows that this is not always a necessary step. With a somewhat hazy understanding of the science, cities across the country helped engineer one of the greatest public health interventions ever.

We should be doing everything we can to achieve reductions in the mortality rate anywhere near those of the early 1900s. Compared to what happened before 1955, the progress in the decades since feels almost minuscule.

And that is not because we ran out of problems to solve. While infectious disease deaths were greatly reduced, total deaths in other disease areas often remained relatively similar or worsened. In the same period when infectious diseases fell from 37% of total deaths to less than 5%, other diseases became our new ‘major problems’. Deaths due to heart disease, cancer, and strokes grew from 7% of total deaths to 60% of total deaths. Fewer people were dying, but we still had major problems left to solve.

One area of particular emphasis has been the ‘War on Cancer.’ The vast majority of the tens of billions spent on the War on Cancer over the past 50 years has been targeted at medical interventions and therapies. And, while this work has made some progress, many are quite disappointed with how little we’ve accomplished in that time. For those who are not satisfied with the current rate of progress on the cancer problem, the history of how we tackled infectious diseases should serve as an inspiration! Just look at the chart below. There are ways to fight diseases outside of the traditional university lab or the hospital.

There might be equivalent public health measures that could help us make headway in eliminating current medical problems. Let’s take environmental cancer-causing agents as one example. Many in the cancer research and public health community have argued that the government should put more serious resources into the fight against environmental causes of cancer—albeit with limited success.

The war on infectious diseases might have a lot to say about how we could effectively pursue this goal. Understanding the environmental aspects of what causes cancer is not such a different problem from the infectious disease problem in the early 1900s.

Similar to how many felt about infectious diseases at the turn of the century:

It feels like carcinogens are everywhere and are almost an inevitable presence in our lives.

Sometimes when you come in contact with a (most likely) carcinogenic material you get cancer, but many times you don’t.

It’s very hard to disentangle which of the many materials that make up a cancer-causing entity are the problem and which are just correlated with the problem.

Even if we only have a middling level of scientific understanding of the disease, why can’t we hope to halt these deaths from cancer due to environmental reasons?

As with all novel lines of research, there are no guarantees this would work. Obviously, these are not the exact same problems on a micro-level, but given the structural similarities, it seems that a solution that worked well on one might lend some insight to the other. We know that environmental risk factors are a major contributor to the disease. A paper in Nature by Wu et al. estimates that up to 90% of the risk factors in cancer development are due to extrinsic factors. And basic cancer researchers are increasingly embracing top-down, organicist approaches to viewing the problem as opposed to the bottom-up, reductionist, cell-focused approaches that were once more common than they are now.

With 1.75 million new cancer cases in the US every year along with 600,000 deaths, our high spending levels researching the problem are understandable. With even a modest fraction of the brainpower and resources that have gone into trying to cure cancer through the hospital, we could potentially make great strides in isolating carcinogen-creating materials and locations with a much higher level of certainty. The whole process could be inspired by the methodology of those who have been studying environmental risk factors of cancer for decades (pieces like this delve into many specific studies), but on a much larger and more thorough scale.

The government resources in this larger-scale effort could be used to compile relevant public records and datasets into one place, employ health researchers to identify strategies for flagging possibly carcinogenic materials and areas, pay for exploratory medical screening to be leveraged for further research when something is flagged as carcinogenic, and rapidly dispatch field researchers to follow up on any leads and obtain more information on the ground.

The federal government and the medical research ecosystem currently attempt efforts like this, but the conclusions are limited by lack of funds, lack of data, and limited populations to sample from. With all of these efforts scaled up and coordinated, we could potentially achieve a drastic reduction in the effects of environmental cancer-causing agents. This would be expensive, but it is very possible given the resources we spend on cancer, if there’s political will.

It is also important to remember that, for legal reasons, the burden of proof required to regulate something out of existence may be more stringent than the burden of proof required to convince a scientist that something is likely carcinogenic. So, while we’d hope this proposed process would discover countless new carcinogens which we could eradicate, you could imagine the process paying for itself solely by increasing the number of known carcinogens for which we are able to compile additional evidence and eradicate through the legal/regulatory process.

My advice for those who are interested in setting a program like this in motion:

Advice for Public Sector: More of the ~$40 billion NIH budget deserves to go to explorations inspired by the war on infectious diseases that was so decisively won. The interventions employed there were responsible for the biggest mortality rate improvement in American history. There are likely more massive wins that can follow from learning from this playbook.

Advice for Philanthropists and New Science Funders: Exploratory, proof-of-concept research projects in this area could be an extremely high-leverage use of money. The government would likely be best suited to take over the process at some point, but they might not be optimally suited to undertake the proof-of-concept stage. If one of these funders could prove this to be a ‘winner’ and give a sense of the costs it would entail, the government might become quite interested in investing and scaling the operation.

We won the war on infectious diseases. Why can’t we do it again?

Very interesting, thanks! I covered some similar ground in my essay “Draining the swamp”; I think you would enjoy the references in that one. Some thoughts:

Did “medicine” cause the mortality decline?

There is a lot of truth in the McKinlay paper. In particular, it's true that sanitation efforts probably did more than anything else to improve mortality in the 20th century. I think this is for two reasons: (1) Those techniques are less technologically advanced, and so they arrived first; vaccines and antibiotics that arrived later only get credit for mopping up what was left. (2) Prevention is more effective than cure.

But I think you have to be careful with how you interpret the data. It would be a little too easy to conclude that only certain techniques really mattered and others were not so important, and I think this is the wrong way to look at it.

One key thing to understand is that each disease spreads in its own way. Some are water-borne, some spread via insects or food, etc. This means that a given technique for prevention will work against some diseases and not others. No one technique works against all diseases; we need a collection of techniques.

For instance, you point to the amazing effectiveness of water sanitation. This is correct—against water-borne diseases such as typhoid fever or cholera. But water sanitation doesn't help with malaria, or for that matter with covid.

Were vaccines relevant to mortality improvements?

This is tricky. First, there is the point I made above that vaccines often came after some simpler technique. But also, two of the biggest successes of vaccines are smallpox and polio.

More here.

Comments on specific charts from McKinlay

Did we need the germ theory?

My reading of the history is that the germ theory was somewhat more important than you make it out to be in this piece.

On water sanitation, it's true that these efforts began pre-germ theory, based on aesthetics and vague associations between filth/smell and disease. However, these efforts could only go so far unguided by theory. And sometimes they backfired: guided by the miasma theory, Chadwick built sewers that dumped tons of filthy water into the Thames, actually polluting the drinking water (see The Ghost Map). The germ theory helped set targets for water sanitation: they could actually count bacteria in water samples under the microscope. And I don't think chlorination would have been introduced without germ theory. (Not checking references on this paragraph so I might be getting a few things wrong though)

Pest control is a similar example. Many efforts were begun as early as 18th or even 17th c., but, for instance, malaria and yellow fever were not eliminated until after the exact species of mosquito was discovered for each and its lifecycle and breeding habits carefully studied. Needed science for that one.

Food sanitation also was motivated by an understanding of germ theory. That's part of how we got the FDA, etc.

Overall, some important progress on mortality was made pre–germ theory, but a lot (I think most) was made after, and I think there's a reason for that.

I think the war on cancer will be the same. There is some progress we can make, and have already made, without a fundamental understanding of the disease, and of course we should go ahead and do that. But that will plateau at some point without a breakthrough in basic research. After such a breakthrough I would expect progress to accelerate dramatically.

Your point on the proper, theory-led goals of water sanitation is interesting. I think there's maybe a decent way for us to figure this out.

The copy of Turneaure and Russell's water sanitation textbook I used was from around 1940. But the first edition of that was from 1901. If we could find some analogous top-tier sources used just before the insights from germ theory spread, we could probably figure out how much the best practices in planning changed from before to after the theory took hold.

Do you think that would be fair or did I miss something? Because I'd believe you very well might be right. What I wrote was a fair representation of my sources but this is an area where I am very aware that my sources are few. So I don't hold these beliefs nearly as confidently as my views related to something like physics in the early 1900s where my reading has been far more exhaustive.

That would be an interesting mini-research project. Also one of us could check my references here and see what I was relying on when I made those statements…

Hey everyone! I was really excited to share this piece and get everyone's thoughts on the general area, possible extensions, caveats I didn't think of, etc.

Life sciences is not my particular area of expertise, so I was particularly excited to see what everyone thinks about all of this, and whether you know of interesting books and work I can look into to learn more.

Fascinating! The more I study medicine and medical history the more disappointed I am with the field. Do you know of any good critiques of the McKinlays' paper?

I think the general thesis here, that most of the mortality improvements were from sanitation/hygiene rather than from pharma or even vaccines, is fairly well-accepted. But see my comments above for how to interpret this—I don't think there's any reason to be disappointed with the medical field.

I don't, but I'm sure they could exist. My expertise with sources in this area is not as in-depth as in economics or physics history. The reason I was happy to go about publishing is that Grant Miller is known in the econ world to be quite expert, careful, and good at what he does. So I did have a certain faith that, in the three decades between the McKinlays' writing and the Cutler and Miller papers, he'd have rooted out and addressed the primary counterarguments in their two-part research.

And of course I'm always open-minded to update my views as things come out now as well