This is the written version of a talk presented to the Santa Fe Institute at a working group on “Accelerating Science.”

We’re here to discuss “accelerating science.” I like to start on topics like this by taking the historical view: When (if ever) has science accelerated in the past? Is it still accelerating now? And what can we learn from that?

I’ll submit that science, and more generally human knowledge, has been accelerating for basically all of human history. I can’t prove this yet (and I’m only about 90% sure of it myself), but let me appeal to your intuition:

- Behaviorally modern humans are over 50,000 years old

- Writing is only about 5,000 years old, so for more than 90% of the human timeline, we could only accumulate as much knowledge as could fit in an oral tradition

- In the ancient and medieval world, we had only a handful of sciences: astronomy, geometry, some number theory, some optics, some anatomy

- In the centuries after the Scientific Revolution (roughly 1500s–1700s), we got the heliocentric theory, the laws of motion, the theory of gravitation, the beginnings of chemistry, the discovery of the cell, better theories of optics

- In the 1800s, things really got going, and we got electromagnetism, the atomic theory, the theory of evolution, the germ theory

- In the 1900s things continued strong, with nuclear physics, quantum physics, relativity, molecular biology, and genetics

I’m leaving aside the question of whether science has slowed down since ~1950 or so, which I don’t have a strong opinion on. Even if it has, that’s mostly a minor, recent blip in the overall pattern of acceleration across the broad sweep of history. (Or, you know, the beginning of a historically unprecedented reversal and decline. One or the other.)

Part of the reason I’m pretty convinced of this accelerating pattern is that it’s not just science that is accelerating: pretty much all measures of human advancement show the same trend, including world GDP and world population.

What drives acceleration in science? Many factors, including:

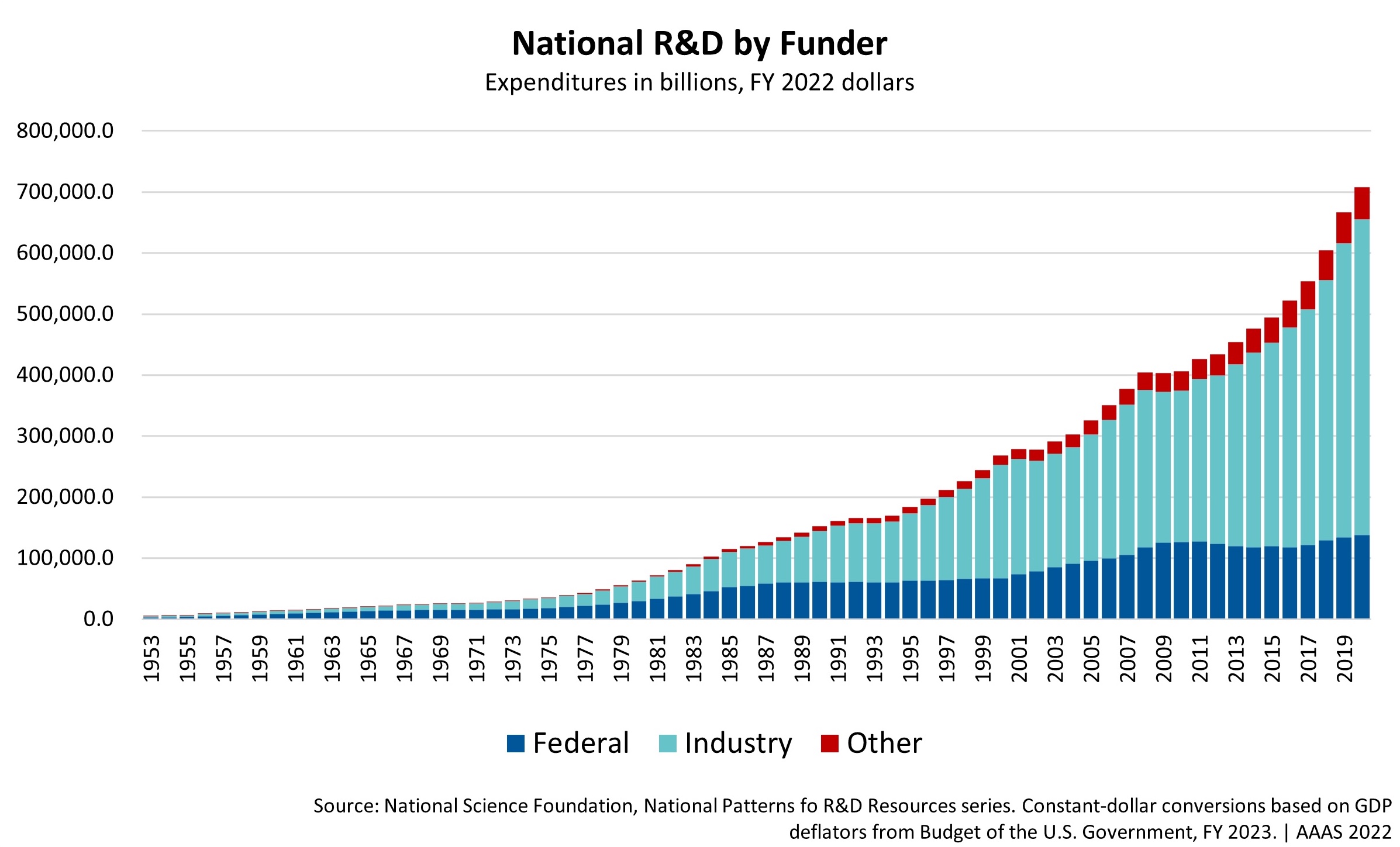

- Funding. Once, scientists had to seek patronage, or be independently wealthy. Now there is grant money available, and the total amount of funding has increased significantly in the last several decades:

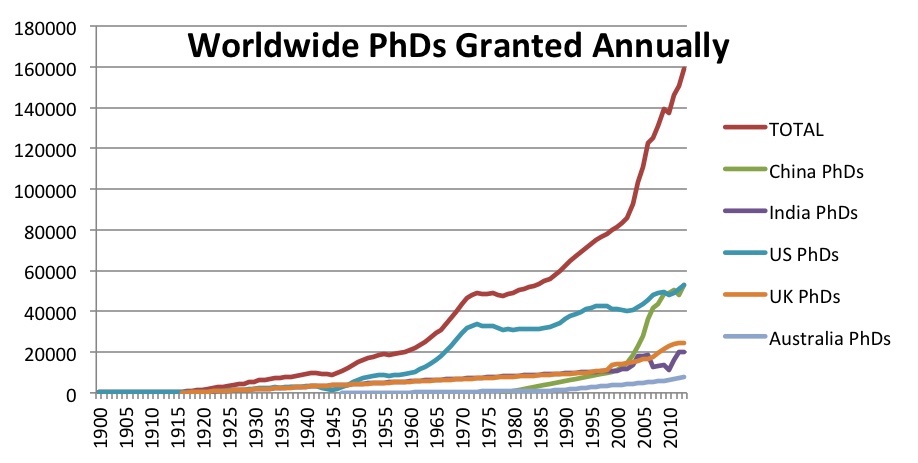

- People. More scientists (all else being equal) means science moves faster, and the number of scientists has grown dramatically, both because of overall population growth and because a greater portion of the workforce is going into research. In *Science Since Babylon*, Derek J. de Solla Price suggested that “Some 80 to 90 percent of all scientists that have ever been, are alive now,” and this is probably still true.

- Instruments. Better tools mean we can do more and better science. Galileo had a simple telescope; now we have JWST and LIGO.

- Computation. More computing power means more and better ways to process data.

- Communication. The faster and better that ideas can spread, the more efficient and effective scientific communication can be. The scientific journal was invented only after the printing press; the Internet enabled preprint servers such as arXiv.

- Method. Better methods make for better science, from Baconian empiricism to Koch’s postulates to the randomized controlled trial (and really, all of statistics).

- Institutions. Laboratories, universities, journals, funding agencies, etc. all make up an ecosystem that enables modern science.

- Social status. The more science carries respect and prestige, the more people and money flow into it.

Now, if we want to ask whether science will continue to accelerate, we could think about which of these driving factors will continue to grow. I would suggest that:

- Funding for science will continue to grow as long as the world economy does

- Instruments, computation, and communication will continue to improve along with technology in general

- I see no reason why method should not continue to improve, as part of science itself

- The social status of science seems fairly strong: it is a respected and prestigious institution that receives some of society’s top honors

In the long run, we may run out of people to continue to grow the base of researchers, if world population levels off as it is projected to do, and that is a potential concern, but not my focus today.

The biggest red flag is with our institutions of science. Institutions affect all the other factors, especially the management of money and talent. And today, many in the metascience community have concerns about our institutions. Common criticisms include:

- Speed. It can easily take 12–18 months to get a grant (if you’re lucky)

- Overhead. Researchers typically spend 30–50% of their time on grants

- Patience. Researchers feel they need to show results regularly and can’t pursue a path that might take many years to get to an outcome

- Risk tolerance. Grant funding favors conservative, incremental proposals rather than bold, “high-risk, high-reward” programs (despite efforts to the contrary)

- Consensus. A field can converge on a hypothesis and prune alternate branches of study too quickly

- Researcher age. The trend over time is for grant money to go to older, more established researchers

- Freedom. Scientists lack the freedom to direct their research fully autonomously; grant funding has too many strings attached

Now, as a former tech founder, I can’t help but notice that most of these problems seem much alleviated in the world of for-profit VC funding. Raising VC money is relatively quick (typically a round comes together in a few months rather than a year or more). As a founder/CEO, I spent about 10–15% of my time fundraising, not 30–50%. VCs make bold bets, actively seek contrarian positions, and back young upstarts. They mostly give founders autonomy, perhaps taking a board seat for governance, and only firing the CEO for very bad performance. (The only concern listed above that startup founders might also complain about is patience: if your money runs out, you’d better have progress to show for it, or you’re going to have a bad time raising the next round.)

I don’t think the VC world does better on these points because VCs are smarter, wiser, or better people than science funders—they’re not. Rather, VCs:

- Compete for deals (and really don’t want to miss good deals)

- Succeed or fail in the long run based on the performance of their portfolio

- See those outcomes within a matter of ~5–10 years

In short, VCs are subject to evolutionary pressure. They can’t get stuck in obviously bad equilibria because if they do they will get out-competed and lose market power.

The evidence for this is that VC has evolved over the decades, mostly in the direction of better treatment for founders. For instance, there has been a long-term trend towards higher valuations at earlier stages, which ultimately means lower dilution and a shift in power from VCs to founders: it used to be common for founders to give up half or more of their company in the first round of funding; last I checked that was more like 20% or less. VCs didn’t always fund young techies right out of college; there was a time when they tended to favor more experienced CEOs, perhaps with an MBA. They didn’t always support founder-led companies; once it was common for founders to get booted after the first few years and replaced with a professional CEO (when A16Z launched in 2009 they made a big deal out of how they were not going to do that).

So I think if we want to see our scientific institutions improve, we need to think about how they can evolve.

How evolvable are our scientific institutions? Not very. Most scientific organizations today are departments of universities or governments. Much as I respect universities and government, I think anyone would have to admit that they are some of our more slow-moving institutions. (Universities in particular are extremely resilient and resistant to change: Oxford and Cambridge, for instance, date from the Middle Ages and have survived the rise and fall of empires to reach the present day fairly intact.)

The challenges to the evolvability of scientific funding institutions are the inverse of what makes VC evolvable:

- They tend to lack competition, especially centralized federal agencies such as NIH and NSF

- They lack any real feedback loop in which a funder’s resources are determined by the quality of its past judgments and the success of its portfolio (Michael Nielsen has repeatedly pointed out that funding failures ranging from “Einstein did his best work as a patent clerk” to “Katalin Karikó was denied grants and tenure before she won the Nobel prize” don’t even seem to spark any reflection within the relevant institutions)

- They face long cycle times to learn the true impact of their work, which might not be apparent for 20–30 years

How might we improve evolvability of science funding? We should think about how we can improve these factors. I don’t have great ideas, but I’ll throw some half-baked ones out there to start the conversation:

How might we increase competition in science funding? We could increase the role of philanthropy. In the US, we could shift federal funding to the state level, creating fifty funders instead of one. (State agricultural experiment stations are a successful example of this, and competition among these stations was key to hybrid corn research, one of the biggest successes of 20th-century agricultural science.) At the international level, we could support more open immigration for scientists.

How might we create better feedback loops? This is tough because we need some way to measure outcomes. One way to do that would be to shift funding away from prospective grants and more towards a wide variety of retrospective prizes, at all levels. If this “economy” were sufficiently large and robust, these outcomes could be financialized in order to create a dynamic, competitive funding ecosystem, with the right level of risk-taking and patience, the right balance of seasoned veterans vs. young mavericks, etc. (Certificates of impact, such as hypercerts, could be part of this solution.)

How might we solve long feedback cycles? I don’t know. If we can’t shorten the cycles, maybe we need to lengthen the careers of funders, so they can at least learn from a few cycles—a potential benefit of longevity technology. Or, maybe we need a science funder that can learn extremely fast, can consume large amounts of historical information on research programs and their eventual outcomes, never forgets its experience, and never retires or dies—of course, I’m thinking of AI. There’s been a lot of talk of AI to support, augment, or replace scientific researchers themselves, but maybe the biggest opportunity for AI in science is on the funding and management side.

I doubt that grant-making institutions will shift themselves very far in this direction: they would have to voluntarily subject themselves to competition, enforce accountability, and admit mistakes, which institutions rarely do. (Just look at the institutions now taking credit for Karikó’s Nobel win when they did so little to support her.) If it’s hard for institutions to evolve, it’s even harder for them to meta-evolve.

But maybe the funders behind the funders, those who supply the budgets to the grant-makers, could begin to split up their funds among multiple institutions, to require performance metrics, or simply to shift to the retrospective model indicated above. That could supply the needed evolutionary pressure.

I love the work you're doing. I believe there are dysfunctions in the way curiosity-driven academic research gets funded that have had, and will continue to have, major implications for technological progress and economic development. I'm also sympathetic to the sketch of possible reforms. Increasing competition and closing feedback loops on the performance of funding decisions would likely produce tangible benefits.

What I'm wondering about more these days is how things work at the micro level. In my experience, there is a very clear and observable difference between the kinds of researchers who are driven by curiosity and the kinds of researchers who are driven by a desire to see their work have a tangible impact on the world during their lifetime. VC funding has done plenty for the latter. How can we encourage and help the former?

There is probably a baseline level of curiosity-driven research that will happen no matter what we do. There always has been. Many of the people who do curiosity-driven research do it despite having to make huge personal sacrifices. Mostly what we need to do for them is keep other people out of their way.

But people are people, and very few can live as hermits forever. I believe that in many cases, curiosity-driven research accelerates as curiosity-driven researchers find a community of kindred spirits with whom they can share their ideas freely. When fear of being scooped and losing funding is replaced by an infinite, positive-sum game of seeing who can come up with the coolest new theories or results, the work tends to accelerate and multiply in a combinatorial way.

Where are those physical environments today? Bell Labs had one. IBM ARC had one. I feel like the Flatiron Institute has one. Where else?

In the end, funding is only part of the answer here.

Curiosity is already a very strong motivator, we just need to enable it and get out of the way. Give scientists funding without making them narrowly constrain their goals, dial down their ambition, or spend half their time writing grants. Then give them the research freedom to pursue that curiosity wherever it leads. It's not easy but it is pretty simple.

I agree with that. But having seen IBM ARC up close in person in the 1990s, my gut is that there is some critical mass of curiosity -- a threshold number of curious researchers all working in the same place -- that leads to a kind of magic you don't see when the same people are more distributed geographically.

Good point, I agree! That's something important for creating the right research team and lab culture.

I'd add that Polanyi worried about the integrity of the fabric of scientific knowledge. That is, he asked what was the ultimate source of a geologist's or a layperson's confidence that state-of-the-art knowledge in, say, X-ray crystallography had integrity. His concern was that in science there was nothing analogous to the way arbitrage on prices, within and between markets, 'keeps people honest' in their valuations.

His answer was what he called his "theory of overlapping neighbourhoods". On this view, scientific knowledge is “held by a multitude of individuals” connected in a fiduciary network, “each of whom endorses the other's opinion at second hand, by relying on the consensual chains which link him to all the others through a sequence of overlapping neighborhoods”. Polanyi would have appreciated businessman Charlie Munger’s claim that “the highest form a civilization can reach is a seamless web of deserved trust”.

We can apply an analogous process, relying more on those with a good record of shorter-term prediction when making longer-term predictions.

Section 6 of this report discusses how one might 'bootstrap' longer-term vision by using shorter-term forecasting performance as one criterion for judging longer-term forecasting prowess, together with deliberation to set shorter-run expectations that can be used to test the progress of longer-term projects.

Though I don’t think he's cited (his contribution lies in noting the need for such a mechanism rather than in identifying one, since the mechanism itself is pretty obvious), this builds on Polanyi's idea of overlapping neighbourhoods.

Thanks for the post, Jason. Most or all of the proposed mechanisms tend to assume that people are self-interested rather than a mix of self- and other-interest. They play to Hobbesian, not Aristotelian, stereotypes of the way we are. The scientist turned philosopher Michael Polanyi would argue that many of these functions have powerful ethical dimensions, and I'd argue that the structures we've put in place, in appealing to self-interest, tend to crowd out more ethical motivations. Bureaucracies breed careerism. So I'd want to introduce more randomisation and other kinds of mechanisms to ensure that the accountability mechanisms we set up don't just produce more accountability theatre. There are also ways of selecting for merit that don't involve people performing for their superiors in an organisation. For example, the Republic of Venice used a mechanism called the Brevia, by which a subgroup of the 2,000-plus citizens with political power was chosen by lottery. This group then convened behind closed doors and held a secret ballot. The idea was to insulate the merit selection process from favours to power. It seems to have worked a charm, helping Venice become the only city-state in Italy to get through the 500 years from the beginning of the 13th century without any successful coups or civil wars.

Hmm, I don't agree with how you are characterizing my assumptions about human nature. I'm not assuming that scientists are after money or prestige. I assume most of them, or at least the best of them, are motivated by curiosity, the desire to discover and to know, and the value of scientific knowledge for humanity.

Re accountability, I frankly think we could do with a bit less of it. Accountability is always in tension with research freedom.

Re people performing for their superiors: I actually think scientists performing for their managers would be a much healthier model than what we have today, which is scientists performing for their grant committees. I have another piece on this that I plan to publish soon.

Thanks, Jason. I don't think you've understood what I was trying to get at.

OK, sorry!