One of the defining characteristics of the modern world is the ubiquity of steel. Nearly every product of industrial civilization relies on steel, either as a component or as part of the equipment used to produce it. Without it, Vaclav Smil notes in “Still the Iron Age”, modern life would largely be impossible:

…none of [civilization’s] great accomplishments - its surfeit of energy, its abundance of food, its high quality of life, its unprecedented longevity and mobility, and indeed, its electronic infatuations - would be possible without massive smelting of iron and production (and increasingly also recycling) of steel.

...The list of items and services whose reliability and affordability have been made possible by steel is nearly endless as critical components of virtually all mining, transportation and manufacturing machines and processes are made of the metal and hence a myriad of non-steel products ranging from ammonia (synthesized in large steel columns) to wooden furniture (cut by steel saws), and from plastic products (formed in steel molds) to textiles (woven on steel machines). - Vaclav Smil, Still the Iron Age

We see this in construction as well. Every modern structural system, for instance, relies on steel to function. Steel is used for the beams and columns in structural steel framing (found in everything from 100-story skyscrapers to single-story commercial buildings), as reinforcing in both concrete and masonry, and as the connectors (bolts, nails, screws, staples, truss plates) that hold together light-frame wood and heavy timber construction. Roughly half of the steel the world produces each year goes to the construction industry, and the spread of steel into building systems is one of the main ways that modern construction differs from historical construction.

Steel is ubiquitous for two reasons: it has useful material properties, and it's cheap. Both are the result of centuries of development of steel and of the technology for producing it. Let's take a look at how that development unfolded.

What is steel?

First, some background. Steel is the name given to a broad class of alloys of iron and carbon, along with other alloying elements that vary by type of steel: stainless steels have added chromium and nickel, tool steels have added tungsten, and so on. In practice, essentially any iron alloy with less than 2% carbon gets referred to as steel, and most steel produced today is low-carbon "mild" steel with so little carbon that it would have been categorized as iron in an earlier age[1]. Today there are over 3,500 grades of steel with a broad range of mechanical properties, but in general steels have both high strength (in both tension and compression) and high toughness (absorbing a lot of energy before rupturing). Steel can be produced in a wide variety of sizes, from sheets a small fraction of an inch thick to plates many inches thick. It can be formed into virtually any shape, and it can be worked using many different methods: casting, forging, welding, 3D printing, machining, hot and cold rolling, stamping, etc. It can be made hard, durable, and resistant to corrosion, and it can operate over a wide range of temperatures. I suspect that more effort has gone into understanding and developing steel than into any other material.

While steel substitutes (such as plastics, aluminum, titanium, and carbon fiber) are increasingly available today, these are all relatively modern developments[2]; prior to the 20th century, few materials could match the performance of steel. Bret Devereaux notes that steel was the “super material” of the premodern world:

High carbon steel is dramatically harder than iron, such that a good steel blade will bite – often surprisingly deeply – into an iron blade without much damage to itself. Moreover, good steel can take fairly high energy impacts and simply bend to absorb the energy before springing back into its original shape (rather than, as with iron, having plastic deformation, where it bends, but doesn’t bend back – which is still better than breaking, but not much). And for armor, you may recall from our previous look at arrow penetration, a steel plate’s ability to resist puncture is much higher than the same plate made of iron

Despite its usefulness, steel as a widely used material is a relatively modern development. While steel has been used by civilization for thousands of years, prior to the 1850s it was expensive to produce and mostly limited to uses where the high cost was justified, such as edged tools (Smil 2016).

The early history of ironmaking

(Note: for most of these processes, there were a variety of methods and variations used at different times and in different locations. Rather than exhaustively listing them all, I will focus on the most common ones used in Europe and the West.)

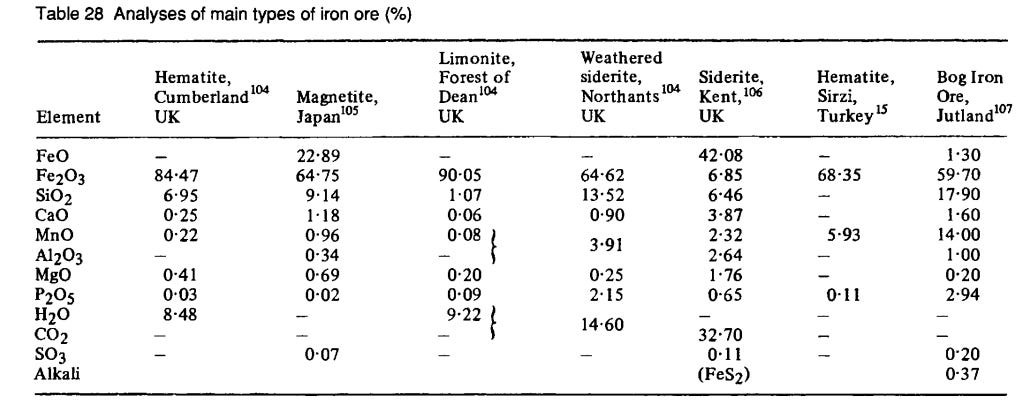

From the ancient world up until the Middle Ages, iron was largely produced in Europe[3] using the bloomery method. Bret Devereaux has written an extremely thorough and accessible description of this process in his series “Iron, How Did They Make It?”, but briefly: iron ore (largely hematite, Fe2O3, mixed with various impurities)[4] would be mixed with charcoal in a special furnace called a bloomery. Charcoal, in turn, was made by heating wood in an oxygen-starved environment until it underwent pyrolysis, leaving behind a mass of mostly pure carbon[5]. A fire in the bloomery would be lit and then fed with air, which would react with the charcoal to produce CO2 and release heat. As the temperature increased and the furnace became oxygen-starved, carbon monoxide (CO) would start to be produced, which would in turn react with the Fe2O3 to produce metallic iron (Fe). The impurities in the ore (largely alumina and silicates) would react with either a flux such as limestone (if one was used) or iron oxide (if it wasn't) to produce slag, which would melt out of the iron, leaving behind a spongy mass of metallic iron called a bloom. The bloom would then be worked with a hammer to knock out most of the remaining slag and consolidate the mass into a rectangular bar of wrought iron. The wrought iron would have a very low carbon content and a small amount of slag distributed throughout the material, giving it a distinctive “wood grain” texture.
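As a rough check on the chemistry, the overall reduction can be written as Fe2O3 + 3CO → 2Fe + 3CO2. The sketch below assumes pure hematite and standard atomic masses. It computes the largest share of iron that hematite can yield and the amount of carbon monoxide (and of the carbon it contains) that the reduction consumes per kilogram of iron. The function names are illustrative and are not taken from any of the sources.

```python
# Standard atomic masses in g/mol
FE = 55.845
O = 15.999
C = 12.011

FE2O3 = 2 * FE + 3 * O  # molar mass of hematite
CO = C + O              # molar mass of carbon monoxide


def fe_fraction_in_hematite():
    """Mass fraction of iron in pure Fe2O3."""
    return 2 * FE / FE2O3


def reductant_per_kg_iron():
    """kg of CO, and of the carbon it contains, consumed per kg of Fe.

    Based on Fe2O3 + 3 CO -> 2 Fe + 3 CO2. Only mass ratios are used,
    so the g/mol units cancel out.
    """
    fe_mass = 2 * FE
    return 3 * CO / fe_mass, 3 * C / fe_mass


co_kg, carbon_kg = reductant_per_kg_iron()
print(f"Iron in pure hematite: {fe_fraction_in_hematite():.1%}")
print(f"Pure hematite per kg of iron: {1 / fe_fraction_in_hematite():.2f} kg")
print(f"CO per kg of iron: {co_kg:.2f} kg ({carbon_kg:.2f} kg of carbon)")
```

Under these idealized assumptions, pure hematite is about 70% iron by mass, and the reduction itself needs only about a third of a kilogram of carbon per kilogram of iron. The much larger ore and charcoal figures quoted below for the bloomery reflect impure ore, charcoal burned as fuel, and iron lost to slag.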

There were (roughly) two ways to get steel. The first was to produce it directly in the bloomery: with a high enough charcoal-to-iron ratio, the iron would absorb carbon and yield a bloom of steel (it's not clear to me whether this was actually done in Europe; Tylecote and Williams suggest it was common, but Devereaux says there's no strong evidence for it).

The more common method of producing steel was cementation: wrought iron from the bloomery would be placed in large clay chests and packed with charcoal. The chests were then heated for several days, during which the iron would slowly absorb carbon from the charcoal. Because the carbon had to migrate in from the surface, cementation was typically done using thin rods of iron. To turn the cemented rods into more usable metal, they would often be piled: repeatedly heated and folded back on themselves to produce a thicker piece of (relatively) uniform steel (the resulting steel would later be called “shear steel”). Different grades of iron and steel might then be combined via pattern welding; a blade, for instance, might have a hard steel edge attached to a core of softer steel or wrought iron. All this additional processing meant that steel was perhaps 4 times as expensive as wrought iron to produce, and it was thus used comparatively sparingly.

Beyond the time and effort required for the smelting and smithing, the other inputs to this process were enormous. Producing a single kilogram of iron required perhaps 6 kilograms of iron ore and 15 kilograms of charcoal. That charcoal, in turn, required perhaps 105 kilograms of wood. Turning a kilogram of iron into steel required another 20 kilograms of charcoal (140 kilograms of wood). Altogether, it took about 250 kilograms, or just over 17 cubic feet, of wood to produce enough charcoal for 1 kilogram of steel (assuming a specific gravity of 0.5 for the wood). By comparison, US steel production is currently roughly 86 million metric tons per year. Producing that much steel with medieval methods would require about 1.4 trillion cubic feet of wood for charcoal, nearly 100 times the US's annual lumber production.
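Here is a minimal sketch of the arithmetic above. It uses the per-kilogram figures and the assumed specific gravity of 0.5 given in the text. The only added input is the standard conversion from cubic meters to cubic feet. It ignores production losses, so it describes an idealized lower bound.

```python
# Figures quoted in the text for bloomery iron turned into cemented steel
CHARCOAL_TO_SMELT_KG = 15    # charcoal per kg of bloomery iron
CHARCOAL_TO_CEMENT_KG = 20   # extra charcoal per kg of iron cemented into steel
WOOD_PER_KG_CHARCOAL = 7     # 105 kg wood / 15 kg charcoal, as in the text
WOOD_SPECIFIC_GRAVITY = 0.5  # the text's assumption
WATER_KG_PER_M3 = 1000.0
FT3_PER_M3 = 35.3147


def wood_per_kg_steel():
    """Return (kg of wood, cubic feet of wood) needed per kg of steel."""
    charcoal_kg = CHARCOAL_TO_SMELT_KG + CHARCOAL_TO_CEMENT_KG
    wood_kg = charcoal_kg * WOOD_PER_KG_CHARCOAL
    wood_m3 = wood_kg / (WOOD_SPECIFIC_GRAVITY * WATER_KG_PER_M3)
    return wood_kg, wood_m3 * FT3_PER_M3


def wood_ft3_for_output(tonnes_of_steel):
    """Scale the per-kg figure up to an output given in metric tons."""
    _, ft3_per_kg = wood_per_kg_steel()
    return tonnes_of_steel * 1000 * ft3_per_kg


wood_kg, wood_ft3 = wood_per_kg_steel()
print(f"{wood_kg} kg of wood ({wood_ft3:.1f} ft^3) per kg of steel")
print(f"86 million t of steel: {wood_ft3_for_output(86e6):.2e} ft^3 of wood")
```

With these rounded inputs, the script gives about 245 kilograms (17.3 cubic feet) of wood per kilogram of steel. Scaling that to 86 million metric tons lands near 1.5 trillion cubic feet.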

Turning wood into charcoal also took an enormous amount of labor. A skilled woodcutter could cut perhaps a cord (128 cubic feet) of wood per day, so a ton of steel would require ~130 days of labor for chopping the wood alone. Adding in the effort required to make the charcoal (which involved arranging the wood in a specially designed pile, covering it with clay, and supervising it while it smoldered), Devereaux suggests a one-man operation might require 8-10 days to produce ~250 kilograms of charcoal (enough for ~7.2 kilograms of steel), or more than 1,000 days of labor to produce enough charcoal for a ton of steel[6]. And this may underestimate the inputs required, because getting a ton of usable steel would require more than a ton of wrought iron due to production losses. During piling, for instance, much of the steel would be lost as the heated metal reacted with oxygen in the air, forming iron oxide. And while a ton of steel sounds like a lot, it only amounts to a cube 20 inches on a side, or slightly more than the amount of steel used in a single car.
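The labor and size figures can be checked the same way. This sketch rests on several assumptions:

- one cord of wood per woodcutter-day;
- roughly 17.3 cubic feet of wood and 35 kilograms of charcoal per kilogram of steel, taken from the figures above;
- "ton" read as a metric ton (1,000 kg);
- a typical carbon-steel density of 7,850 kg/m³ for the cube.

The function names are hypothetical.

```python
CORD_FT3 = 128                # one cord of wood
CORDS_PER_DAY = 1             # skilled woodcutter, per the text
WOOD_FT3_PER_KG_STEEL = 17.3  # 245 kg of wood at specific gravity 0.5
CHARCOAL_PER_KG_STEEL = 35    # 15 kg to smelt + 20 kg to cement
BATCH_CHARCOAL_KG = 250       # Devereaux's one-man charcoal batch
BATCH_DAYS = (8, 10)          # days per batch (low, high)
STEEL_DENSITY = 7850          # kg/m^3, assumed typical carbon steel
IN_PER_M = 39.37


def chopping_days(steel_kg):
    """Woodcutter-days needed just to cut the wood."""
    return steel_kg * WOOD_FT3_PER_KG_STEEL / (CORD_FT3 * CORDS_PER_DAY)


def charcoal_days(steel_kg):
    """(low, high) days of charcoal making for a given mass of steel."""
    batches = steel_kg * CHARCOAL_PER_KG_STEEL / BATCH_CHARCOAL_KG
    return tuple(batches * days for days in BATCH_DAYS)


def cube_side_inches(steel_kg):
    """Edge length of a solid steel cube of the given mass."""
    return (steel_kg / STEEL_DENSITY) ** (1 / 3) * IN_PER_M


TON_KG = 1000
low, high = charcoal_days(TON_KG)
print(f"Chopping: {chopping_days(TON_KG):.0f} days")
print(f"Charcoal making: {low:.0f}-{high:.0f} days")
print(f"Cube side: {cube_side_inches(TON_KG):.1f} in")
```

At those rates, the charcoal batches alone come to 1,120 to 1,400 days per ton of steel. A metric ton of steel forms a cube just under 20 inches on a side.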

The industrialization of ironmaking

The first major step in industrializing the ironmaking process was the development of the blast furnace, which Alan Williams suggests evolved from bloomeries getting larger. As a furnace gets larger, it burns hotter. The heat produced rises with the cube of furnace size (as heat is a function of fuel volume), but heat losses rise only with the square of furnace size (as losses are a function of furnace surface area). Build a large enough furnace, and it would get hot enough to completely melt the iron, rather than leaving it a spongy, semisolid mass. And the higher temperature, combined with the larger size and a larger amount of charcoal, changed the reactions that take place within[7]:

Different reactions will now have a chance to take place. The iron oxide will be reduced to iron earlier in its passage through the combustion zone; the iron will spend longer traveling down the shaft and will absorb more carbon, thus lowering its melting point (the melting-point of pure iron is 1550°C). At the same time, since the ambient temperature is higher, the likelihood of the iron melting will be greater. If it does become liquid, then carbon will be absorbed rapidly, to form a eutectic mixture, containing 2% of carbon, whose melting-point is 1150°C…

...Less iron oxide will be present to form slag, so its composition will be closer to calcium silicate than iron silicate, and its free-running temperature will be higher (around 1500° - 1600°C). Other elements, such as manganese (Mn) silicon (Si) and phosphorus (P) may also be reduced from their compounds. The overall efficiency of extraction will be higher, since less iron will be left unreduced in the slag. The products will be a liquid iron ("cast iron" or "pig iron"), and an almost iron-free slag. - Alan Williams, The Knight and the Blast Furnace
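The scaling argument at the start of this section can be made concrete with an idealized model. The sketch below assumes a hypothetical cube-shaped furnace that generates heat uniformly per unit of volume and loses it uniformly per unit of surface area. The rates are arbitrary placeholder values, not measurements of any real furnace.

```python
def heat_balance(size, gen_per_volume=1.0, loss_per_area=1.0):
    """Idealized cubic furnace with edge length `size` (arbitrary units).

    Returns (heat generated, heat lost, generated/lost ratio).
    """
    generated = gen_per_volume * size ** 3  # scales with volume
    lost = loss_per_area * 6 * size ** 2    # scales with surface area (6 faces)
    return generated, lost, generated / lost


for size in (1, 2, 4):
    gen, lost, ratio = heat_balance(size)
    print(f"size {size}: generated {gen:.0f}, lost {lost:.0f}, ratio {ratio:.2f}")
```

Heat generated grows with the cube of size, and losses grow with the square, so their ratio grows in direct proportion to size. Doubling the furnace's dimensions doubles the heat generated relative to the heat lost. Real furnaces are not cubes and do not generate heat uniformly, so this only illustrates the trend.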

Because it burned hotter, a blast furnace could use lower-quality ore than a bloomery, and its larger size meant that it could produce more iron. And unlike the spongy bloom produced by the bloomery, the liquid iron from a blast furnace could be poured into molds and cast. Because it could produce large, seamless castings, blast furnace iron was far better suited than wrought iron for things like cannons (wrought iron couldn’t be used effectively for cannons because of the seams where pieces had to be stitched together). It’s likely that early blast furnaces were largely used to produce cast-iron cannons, which were superior to, and cheaper than, bronze cannons.

The earliest blast furnaces in Europe date to the 12th century, and by the 1500s they were common, though bloomeries remained in use. Though early blast furnaces used fuel less efficiently than bloomeries, they eventually became much more efficient. Tylecote notes that 16th-century furnaces in England required around 4-8 kilograms of charcoal for each kilogram of iron produced, compared to 10-20 kilograms of charcoal for bloomeries[8]. They also lost less iron in the form of slag. Because they still used charcoal, blast furnaces tended to be located where wood was plentiful. They were also typically located near moving water that could drive a water wheel to power the bellows. Early in the development of the blast furnace, smelters learned to add limestone to the furnace. The limestone acted as a flux: the silica and alumina impurities combined with the lime rather than with iron oxide, so less iron was lost to the slag[9].

However, the iron produced by the blast furnace was different from that produced by the bloomery. Whereas bloomery wrought iron was extremely low in carbon (0.2% or less), the iron direct from the blast furnace was much higher in carbon - perhaps 3-4%. The high carbon content made this iron brittle, and, unlike wrought iron, cast iron couldn’t be forged (heated and hammered into shape). Most blast furnace iron, called "pig iron," was therefore converted to wrought iron (also called malleable or bar iron) before being used.[10]

The primary method for converting blast furnace iron to wrought iron was known as the fining process. Fining methods varied in their details, but broadly, pig iron from the blast furnace was placed in a hearth and heated with a charcoal fire stoked by bellows. It would undergo several cycles of melting and reheating, during which the carbon (and most of the other impurities) would be removed. The fined iron would then be heated and worked with a tilt-hammer, which knocked out most of the slag, resulting in low-carbon wrought iron. (In some cases, this working was done in a separate forge, called a chafery, which ran at a lower temperature and could use coal as fuel instead of charcoal.) Like the bloomery, the finery could in principle produce steel as well, but through the 1700s most steel in Europe was still produced via the slow and expensive cementation process.

Charcoal to coke

Over time, blast furnaces got taller, larger, and more efficient per unit of iron produced, but they still required large amounts of charcoal and thus large amounts of wood. At the end of the 17th century, English blast furnaces were using about 40 kilograms of wood for each kilogram of pig iron. Obtaining enough wood to make charcoal was difficult. Residents in areas around ironworks often objected to the ironworks consuming so much of the available wood, in some cases petitioning the king to intervene, and furnaces often had to shut down for lack of fuel. The problem was compounded by losses in the next step: roughly a third of the pig iron would be lost during its conversion to wrought iron.
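Those conversion losses add to the fuel burden. Here is a minimal sketch that uses the two figures in this paragraph and counts only the wood burned for smelting, not the charcoal used in the finery itself. The function names are hypothetical.

```python
WOOD_PER_KG_PIG = 40     # kg of wood per kg of pig iron, late-1600s England
CONVERSION_LOSS = 1 / 3  # share of pig iron lost when converting to wrought iron


def pig_per_kg_wrought(loss=CONVERSION_LOSS):
    """kg of pig iron needed to end up with 1 kg of wrought iron."""
    return 1 / (1 - loss)


def smelting_wood_per_kg_wrought(loss=CONVERSION_LOSS):
    """Wood burned in the blast furnace per kg of finished wrought iron."""
    return WOOD_PER_KG_PIG * pig_per_kg_wrought(loss)


print(f"Pig iron per kg of wrought iron: {pig_per_kg_wrought():.2f} kg")
print(f"Smelting wood per kg of wrought iron: {smelting_wood_per_kg_wrought():.0f} kg")
```

Losing a third of the pig iron means each kilogram of wrought iron needs 1.5 kilograms of pig iron. That raises the smelting wood from 40 to about 60 kilograms before any finery fuel is counted.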

Beyond the volume of wood it required, charcoal had other limitations. It was brittle, limiting how high it could be piled in the furnace and thus how much iron could be smelted at once. And its brittleness also meant it couldn't be transported very far from where it was made.

The most obvious replacement for charcoal in the ironmaking process was coal. Like wood, coal can be transformed by pyrolysis, resulting in coke, a mass of nearly pure carbon. The first successful use of coke in the blast furnace dates to the early 1700s and the efforts of Abraham Darby. Darby's goal was to produce cast-iron pots more cheaply than conventional methods allowed. At the time, pots were one of the most difficult things to cast: they had to be cast either with thick walls (making them more expensive) or in heated clay molds (an expensive casting process). Darby, however, believed that using coke would let him cast thin-walled pots using a cheaper, cold molding process called the green sand process.

Once cast, iron would range in color from white to grey. White iron was harder but extremely brittle, and couldn't be cut or filed. Grey iron was less brittle and could be cut or machined more easily. Grey iron was better for casting but was difficult to convert into wrought iron, whereas white iron converted much more easily. The difference in color comes from the different microstructures of the irons, which in turn result from how the iron is cooled and from its chemical composition. Iron that cools too quickly forms white iron, which wasn’t suitable for pots. Thin walls cool quickly, so casting thin-walled pots required heated molds.

But whether cast iron was grey or white was also a function of its carbon equivalent: the carbon percentage plus one-third of the silicon percentage plus one-third of the phosphorus percentage. Iron with a higher carbon equivalent could cool more quickly and still form grey iron. Pig iron produced in a coke-fueled blast furnace tended to have a higher silicon content, and thus a higher carbon equivalent, than charcoal-produced pig iron. So by using coke in his blast furnace, Darby could produce thin-walled pots using a much cheaper molding process.
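The carbon equivalent defined above is a simple weighted sum. The sketch below implements it and applies it to two hypothetical pig iron compositions that differ only in silicon. These compositions are illustrative placeholders, not measured analyses of Darby's or any other historical iron, and no grey/white threshold is assumed.

```python
def carbon_equivalent(c_pct, si_pct, p_pct):
    """Carbon equivalent: %C + (%Si + %P) / 3, all in weight percent."""
    return c_pct + (si_pct + p_pct) / 3


# Hypothetical compositions (C, Si, P) chosen only to show the effect of
# silicon; they are not historical measurements.
samples = {
    "lower-silicon pig (charcoal-style)": (3.5, 0.5, 0.3),
    "higher-silicon pig (coke-style)": (3.5, 2.0, 0.3),
}

for name, (c, si, p) in samples.items():
    print(f"{name}: CE = {carbon_equivalent(c, si, p):.2f}")
```

With carbon held constant, the extra 1.5 percentage points of silicon raise the carbon equivalent by half a point. This is the direction of the effect the text describes for coke-smelted iron.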

Darby was successfully able to produce thin-walled pots with coke-smelted iron, but coke didn’t immediately displace charcoal as a blast furnace fuel. Coke-fired blast furnaces were not any cheaper to operate than charcoal-fired ones, and the use of coke resulted in sulfur impurities in the iron, which, while not a problem for castings, made the iron unsuitable for conversion to wrought iron. As of 1750 only 10% of the pig iron produced in Britain was from coke-fired furnaces.

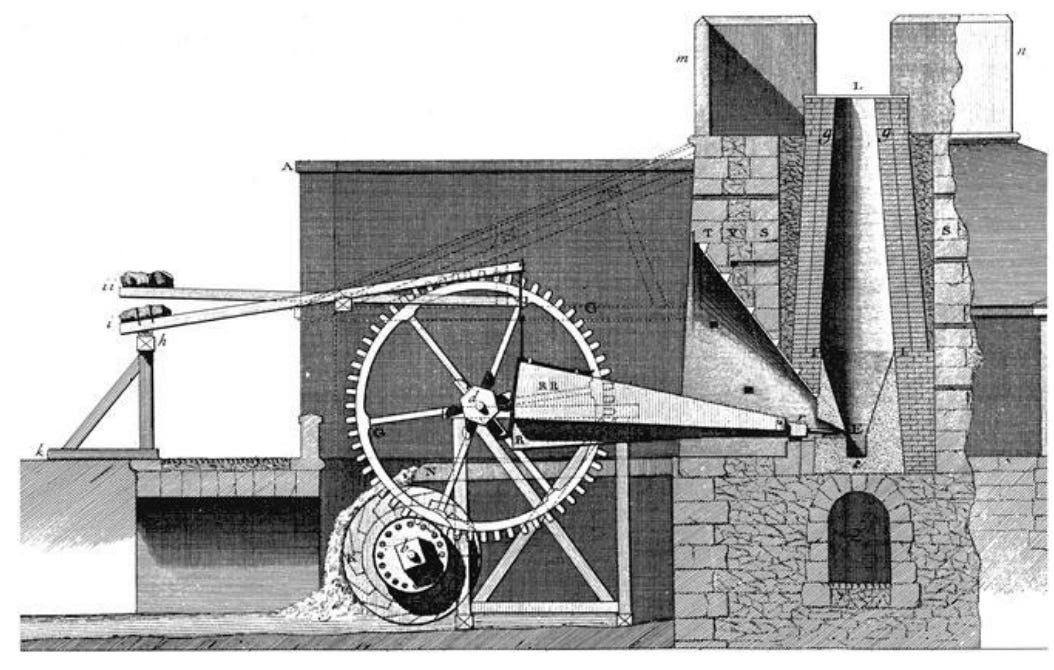

But coke could be piled higher in a blast furnace without being crushed, enabling larger furnaces. Darby's son (Darby II) built larger blast furnaces, using a Newcomen engine to raise water to drive a waterwheel. Their higher temperatures removed the excess sulfur, making the iron suitable for conversion to wrought iron. Coke smelting took off in Britain in the 1750s, and by 1788 almost 80% of pig iron in Britain was produced in coke-fired furnaces. Outside of Britain, however, coke smelting spread much more slowly. In 1854, nearly half the iron in the US and France was still produced using charcoal, and coke-fired blast furnaces didn’t appear in Italy until 1899.

Removing the charcoal constraint allowed iron output to greatly increase. In 1720, about 20,000 tons of cast iron were produced in Britain (most of which was converted to wrought iron), nearly all of which was produced using charcoal. By 1806, that had risen to more than 250,000 tons, nearly all of which was produced using coke.
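Those two data points imply a compound growth rate. Here is a short sketch of the calculation. It treats 1806's "more than 250,000 tons" as exactly 250,000, so the result is a floor. The function name is illustrative.

```python
def annual_growth_rate(start, end, years):
    """Compound annual growth rate between two output figures."""
    return (end / start) ** (1 / years) - 1


rate = annual_growth_rate(20_000, 250_000, 1806 - 1720)
print(f"British cast iron output grew at least {rate:.1%} per year")
```

This works out to roughly 3% per year, sustained over 86 years.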

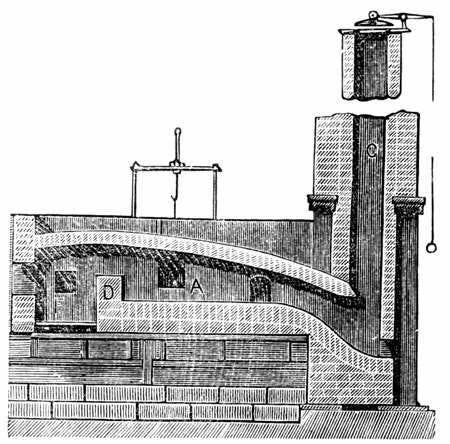

However, converting pig iron to wrought iron still required charcoal. This was solved by the puddling process, invented by Henry Cort in 1784[11]. In the puddling process, pig iron was placed in a coal-fired reverberatory furnace, in which a low wall separated the fuel from the iron. Ironworkers (called puddlers) would constantly stir the pig iron to expose it to oxygen, and the flow of air over the iron would decarburize it.

Cort also improved the method of turning the wrought iron into bars, which had previously been done using tilt-hammers. Cort replaced these with grooved rollers, which were far more efficient. Grooved rollers could produce 15 times as many iron bars as a tilt hammer could in the same period.

Though Cort’s puddling process was an improvement over what came before, it was slow and lost huge amounts of iron during conversion; in some cases, 50% of the pig iron would be lost as slag. The culprit was the sand bottom of the furnace. When the iron was heated, part of it formed an iron oxide (Fe3O4), which reacted with the silica in the sand to produce slag.

Other improvements to iron and steelmaking

Over the next several decades, the blast furnace and puddling furnace were further improved. The sand bottom of the puddling furnace was replaced by an iron-oxide bottom (developed by Joseph Hall), which greatly reduced losses during the conversion process[12]. In 1828 James Neilson improved the blast furnace with the "hot blast", in which the furnace was fed with air preheated by burning coal. This greatly reduced fuel consumption (Smil 2016). Both puddling furnaces and blast furnaces were further improved by technologies that recycled heat from waste gases, such as the Rastrick boiler in 1827 and the Cowper regenerative stove in 1857.

Efficiency improvements to the steelmaking process, however, were much more limited. The main advance was Huntsman's development of "crucible steel" in the early 1740s. Steel from the cementation process (called "blister steel" on account of its swelling during cementation) would be placed in a crucible and heated by a coke furnace until it completely melted. It would then be cast into molds, and then forged into bars for use. Crucible steel eliminated the tedious process of piling, and because the steel was fully melted it was a higher quality, more uniform product than blister steel.

Additionally, by varying the composition of the steel in the crucible, different types of steel could be produced. Crucible steel became the metal of choice for components requiring the highest quality steel such as "razors, cutlery, watch springs, and metal-cutting tools", and the process continued to be used to produce high-quality steel well into the 20th century (when it was replaced by the electric arc furnace).

However, crucible steel required cemented blister steel as an input, so steel remained expensive and limited in use. In the 1700s steel was still 3 times as expensive as wrought iron, and as of 1835 Britain produced perhaps 20,000 tons of blister steel a year, compared to about 1 million tons of pig iron. Similarly, as of 1860 America produced just 13,000 tons of steel against nearly 920,000 tons of pig iron. Large-scale production of steel would have to wait for the work of Henry Bessemer.

Sources

Books (roughly in order of importance)

R.F. Tylecote, A History of Metallurgy

Vaclav Smil, Still the Iron Age

Carnegie Steel, The Making, Shaping and Treating of Steel (Also later editions by US Steel)

Alan Williams, The Knight and the Blast Furnace

Thomas Southcliffe Ashton, Iron and Steel in the Industrial Revolution

Hosford, Iron and Steel

R.A. Mott, Henry Cort: The Great Finer

Robert Rogers, An Economic History of the American Steel Industry

Peter Temin, Iron and Steel in Nineteenth-Century America: An Economic Inquiry

Alan Birch, The Economic History of the British Iron and Steel Industry, 1784-1879

Evans and Ryden, The Industrial Revolution in Iron

Papers and other sources (roughly in order of importance)

Bret Devereaux, Iron, How Did They Make It?

Richard Williams, A question of grey or white: Why Abraham Darby I chose to smelt iron with coke

W.K.V. Gale, Wrought Iron: A Valediction

Richard Williams, The performance of Abraham Darby I’s coke furnace revisited, part 1: temperature of operation

Richard Williams, The performance of Abraham Darby I’s coke furnace revisited, part 2: output and efficiency

H.R. Schubert, Early Refining of Pig Iron in England

Cyril Smith, The Discovery of Carbon in Steel

Flemings and Ragone, Puddling: A metallurgical perspective

Robert Walker, The Production, Microstructure, and Properties of Wrought Iron

[1]

For instance, here’s Hosford 2012:

The word steel is used to describe almost all alloys of iron. It is often said that steel is an alloy of iron and carbon. However, many steels contain almost no carbon. Carbon contents of some steels are as low as 0.002% by weight. The most widely used steels are low-carbon steels that have less than 0.06% carbon.

Likewise, Tylecote 2002 defines mild steel as “Modern equivalent of wrought iron but without the slag which gives the latter its fibrous structure.”

As we'll see, the definition of steel has been somewhat flexible over time. Historically, before its chemical makeup was understood, steel was defined as a type of iron that could be hardened by quenching (Carnegie 1920). Once its chemical makeup was understood, it began to be defined as iron with between ~0.2% and ~2% carbon. Later, it was defined, roughly, as "whatever gets produced by the open-hearth or Bessemer processes" (Misa 1995).

[2]

Aluminum didn’t become economical to use until the development of the Hall-Heroult process in the late 1800s, and remains more expensive than steel. As of this writing steel is about $750/ton for hot rolled steel band and $1675/ton for steel plate, compared to more than $2300/ton for aluminum.

Polyethylene wasn’t commercially produced until 1939, polyvinyl chloride was discovered in the late 1800s but wasn’t successfully used until 1926, and polypropylene wasn’t produced until 1951. Fiberglass wasn’t produced until the 1930s.

Titanium wasn’t produced until 1910, an economical process for producing it (the Kroll process) didn’t appear until 1940, and it remains extremely expensive (Tylecote 2002).

[3]

Places like China and India had different methods of iron and steel production.

[4]

Compositions of various iron ores from Tylecote 2002:

[5]

This carbon serves three purposes: it acts as fuel for the furnace, it reacts with the oxygen so the metal can be smelted, and it dissolves in the iron itself to produce steel. These three roles made it difficult to suss out the role of carbon in the ironmaking process, and it wasn't until the late 1700s and the chemical revolution that the role of carbon in steel was understood.

[6]

Though this assumes no economies of scale for a larger operation.

[7]

For a more detailed look at the reactions that take place in a modern blast furnace, see this diagram from “The Making, Shaping, and Treating of Steel”:

[8]

16th-century blast furnaces still required about 6 tons of ore per ton of pig iron, but by the 20th century, blast furnaces were using just 2 tons of ore per ton of pig iron (Tylecote 2002, Carnegie 1920).

[9]

This seems like it should have increased the yield per ton of ore, and indeed Williams and Tylecote state that blast furnaces were more efficient in ore use. But the amount of iron yielded per ton of ore in a 16th century blast furnace was similar to the amount Devereaux gives for the yield of a bloomery. This may be because blast furnaces used lower quality ores with a smaller fraction of iron.

[10]

Confusingly, there would later be a product called “malleable cast iron” (which sometimes gets shortened to “malleable iron”): cast iron that had been treated to be more malleable. “The Making, Shaping, and Treating of Steel” describes the process for making it:

Malleable cast iron is…obtained from crude pig iron of a certain composition chemically, which, upon being cast into the desired form, is subsequently subjected to a combined annealing and oxidizing process by which the malleability is developed. In carrying out the process, the clean casting is packed in iron oxide and subjected to temperature of about 700 for three or more days, after which it is allowed to cool in the furnace very slowly. By this treatment the greater portion of the combined carbon is converted into graphite that takes the form of very minute particles evenly distributed throughout the casting, and so does not have the weakening effect that flakes of graphite have.

[11]

Cort, in turn, built on earlier work of folks like Peter Onions and the Cranage brothers (Tylecote 2002).

[12]

The iron oxide bottom was actually a major innovation that fundamentally changed the chemical reactions of the process. See Flemings and Ragone.