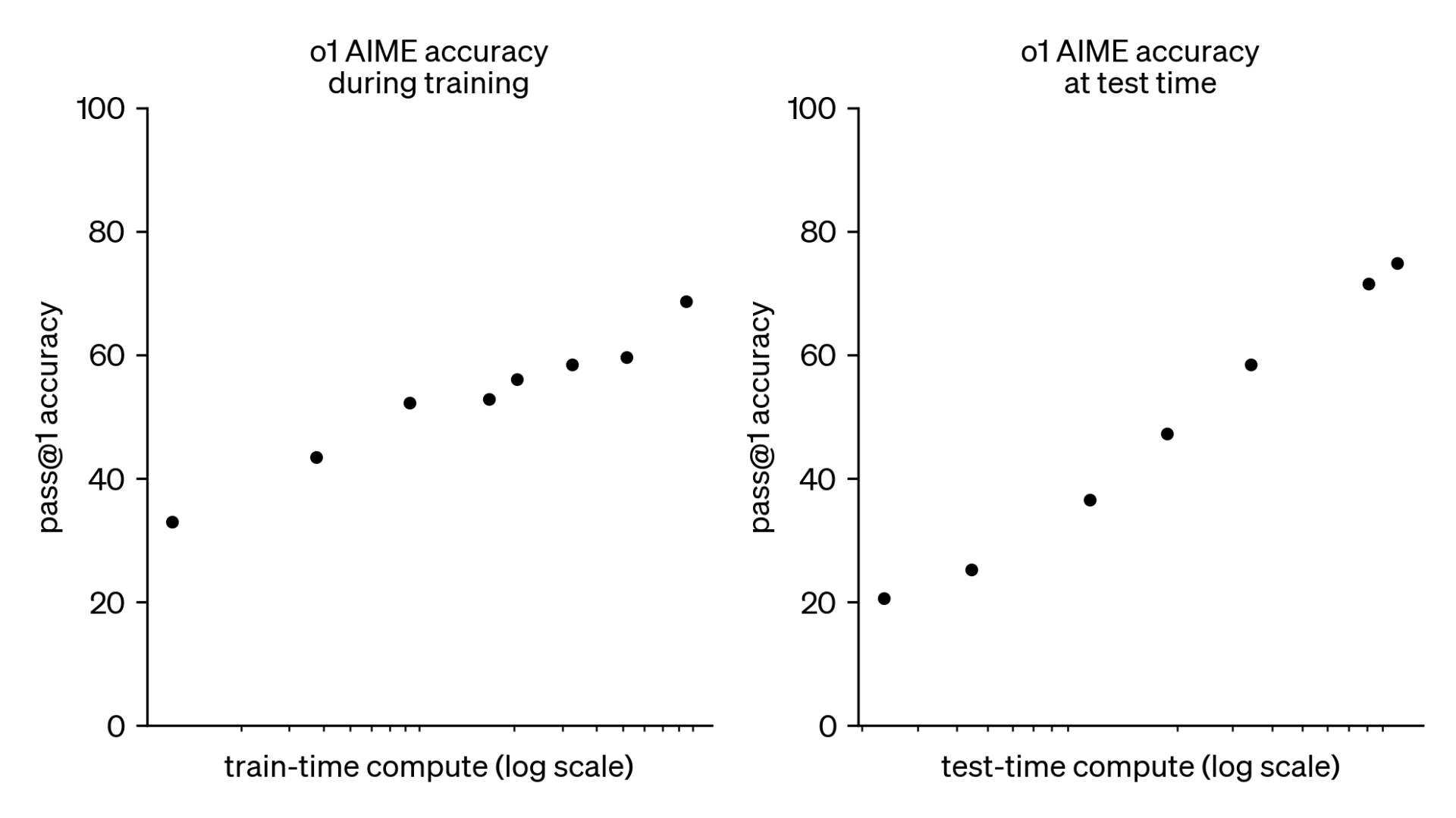

A few minutes ago, OpenAI's o1 was announced. It's easy to miss the massive (terrifying?) implications of the right-hand graph ("test-time compute").

We've built a cultural cadence of re-adjusting our sense of what technology can do every time there's a new announcement, most recently with regard to LLMs. They could write poems, then a few weeks later a new model could autocomplete a line of code, then by winter they could write a whole Python function. Each time there were some headlines and everyone went to try it and develop a consensus.

Now, with more "test-time" compute thrown at a problem, the upper bound is... unbounded. That means that when I mess around with it over the next 72 hours, I'm *not* going to be able to understand what it could do if given 100x the time to think. And it will be easy to believe that its power, for better and for worse, is roughly what we see when it's applied to various toy examples.

Having tried to push GPT-4 through complex, high-value problems, I know that the value of additional time falls to zero pretty quickly. It just can't get there, in the same way a dog just can't do calculus. But what if it could? What if, for things that really mattered, the model we currently have could run for not 10, not 100, but 100,000 seconds (about 1.2 days)? Sounds expensive, but for some applications it will be worth it.

What's scary is that we won't get "used to" this expanded power with our usual toy prompts. Nobody records 26-hour-long YouTube tutorials. But for a small number of the most valuable segments of our personal and civic lives, that massively scaled computational output will drive the world we live in, compete in, and try to protect our digital devices in.

The right next step is still to try it out. But I'd encourage you to push it further than feels right. Rack up $7 in API charges for a single prompt, on occasion. And really keep an open mind about how fast the ceiling is rising.