Over at Medium, I just posted an essay on "The Proper Governance Default for AI." It's a draft of a section of a big forthcoming study on “A Flexible Governance Framework for Artificial Intelligence,” which I hope to complete shortly.

https://link.medium.com/nGZWnEtNlqb

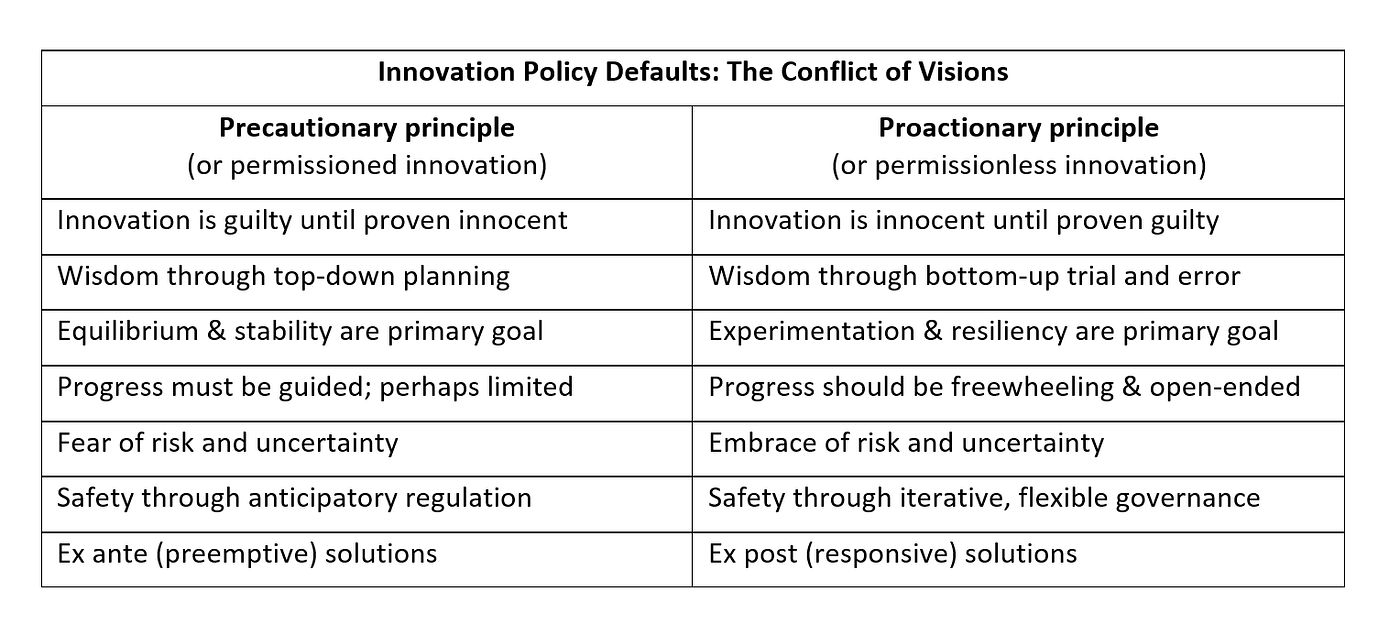

I note that, to the extent AI design becomes the subject of legal or regulatory decision-making, a choice must be made between two general approaches: "the precautionary principle" and "the proactionary principle." While there are many hybrid governance approaches in between these two poles, the crucial issue is whether the initial legal default for AI technologies will be set closer to the red light of the precautionary principle (i.e., permissioned innovation) or to the green light of the proactionary principle (i.e., permissionless innovation).

I'd welcome any thoughts folks might have. I am currently serving as one of 11 Commissioners on the U.S. Chamber of Commerce AI Commission on Competition, Inclusion, and Innovation, where we are debating the policy issues surrounding artificial intelligence and machine learning technologies.

One thing to keep in mind is the potential for technologies to be hacked. I think widespread self-driving cars would be amazingly convenient, but also terrifying, since companies allow them to be updated over the air. Even though the chance of a hacking attack at any particular instant is low, given a long enough time span and enough companies, a successful attack is practically inevitable. When it comes to these kinds of wide-scale risks, a precautionary approach seems viable; when it comes to smaller and more manageable risks, a more proactionary approach makes sense.
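
To put rough numbers on the "practically inevitable" point, here is a minimal sketch. It assumes each company faces the same small, independent chance of a successful attack each year, which is a simplification; the 1% figure, the 20 companies, and the function name are made up for illustration and are not estimates of real-world risk.

```python
def prob_at_least_one_breach(p_per_company_year: float, companies: int, years: int) -> float:
    """Chance of at least one successful attack across all companies and years.

    Assumes every company-year is an independent trial with the same
    probability p_per_company_year (a hypothetical, illustrative input).
    """
    trials = companies * years
    # Probability that every single trial is breach-free, subtracted from 1.
    return 1.0 - (1.0 - p_per_company_year) ** trials


# Illustrative inputs only: a 1% yearly chance per company, 20 companies.
for years in (1, 10, 30):
    risk = prob_at_least_one_breach(0.01, 20, years)
    print(f"{years:>2} years: {risk:.1%} chance of at least one breach")
```

Under those assumed inputs, the cumulative chance is roughly 18% after one year and about 87% after ten, even though any single company-year looks safe. Real attacks are not independent or equally likely, so this only illustrates how small per-period risks compound over time and across operators.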