You’ve probably seen several conversations on X go something like this:

Michael Doomer ⏸️: Advanced AI can help anyone make bioweapons

If this technology spreads it will only take one crazy person to destroy the world!

Edward Acc ⏩: I can just ask my AI to make a vaccine

Yann LeCun: My good AI will take down your rogue AI

The disagreement here hinges on whether a technology will enable offense (bioweapons) more than defense (vaccines). Predictions of the “offense-defense balance” of future technologies, especially AI, are central in debates about techno-optimism and existential risk.

Most of these predictions rely on intuitions about how technologies like cheap biotech, drones, and digital agents would affect the ease of attacking or protecting resources. It is hard to imagine a world with AI agents searching for software vulnerabilities and autonomous drones attacking military targets without imagining a massive shift in the offense-defense balance.

But there is little historical evidence for large changes in the offense-defense balance, even in response to technological revolutions.

Consider cybersecurity. Moore’s law has taken us through a seven-order-of-magnitude reduction in the cost of compute since the 1970s. Along with the increase in raw compute power came massive changes in the form and economic uses of computer technology: encryption, the internet, e-commerce, social media, and smartphones.
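To get a feel for how fast that is, here is a rough back-of-the-envelope sketch of the cost-halving time implied by a seven-order-of-magnitude drop. The 50-year span is my assumption (reading "since the 70s" as roughly 1970 to 2020), and `halving_time_years` is just an illustrative helper name.

```python
import math


def halving_time_years(orders_of_magnitude: float, span_years: float) -> float:
    """Years per cost halving implied by a total drop of 10**orders_of_magnitude."""
    # A drop of 10**k is the same as k * log2(10) successive halvings.
    halvings = orders_of_magnitude * math.log2(10)
    return span_years / halvings


# Assumption: the seven orders of magnitude took about 50 years (1970 to 2020).
compute_halving = halving_time_years(7, 50)
print(f"{compute_halving:.2f} years per halving")
```

Under that assumption the cost of compute halved a bit more often than once every two and a quarter years, sustained for half a century.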

The usual offense-defense balance story predicts that big changes to technologies like this should have big effects on the offense-defense balance. If you had told people in the 1970s that in 2020 terrorist groups and lone psychopaths could carry in their pocket more computing power than IBM had produced up to that point, what would they have predicted about the offense-defense balance of cybersecurity?

Contrary to their likely prediction, the offense-defense balance in cybersecurity seems stable. Cyberattacks have not been snuffed out, but neither have they taken over the world. All major nations have defensive and offensive cybersecurity teams, but no one has gained a decisive advantage. Computers still sometimes get viruses or ransomware, but these attacks haven’t grown to endanger a large share of the GDP of the internet. The US military budget for cybersecurity has increased by about 4% a year every year from 1980 to 2020, which is faster than GDP growth, but in line with GDP growth plus the growing fraction of GDP that’s on the internet.
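The arithmetic behind that last comparison can be sketched quickly. The 4% rate and the 40-year span come from the paragraph above; the GDP growth and internet-share growth rates below are placeholder values I picked purely to show how the two rates combine, not measured figures.

```python
def compound_multiple(annual_rate: float, years: int) -> float:
    """Total growth multiple after compounding annual_rate for the given years."""
    return (1 + annual_rate) ** years


def combined_rate(gdp_growth: float, share_growth: float) -> float:
    """Annual growth of (GDP x internet share) when each grows at its own rate."""
    return (1 + gdp_growth) * (1 + share_growth) - 1


# From the text: about 4% a year over 1980 to 2020, i.e. 40 years.
budget_multiple = compound_multiple(0.04, 40)
print(f"budget grows {budget_multiple:.2f}x over 40 years")

# Hypothetical placeholder inputs, NOT data: 2.5% GDP growth and a 1.5% yearly
# increase in the fraction of GDP that is on the internet.
illustrative = combined_rate(0.025, 0.015)
print(f"combined growth rate: {illustrative:.4f}")
```

The point is only that a budget growing somewhat faster than GDP is what you would expect if defense spending simply tracks the value being defended.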

This stability through several previous technological revolutions raises the burden of proof for why the offense-defense balance of cybersecurity should be expected to change radically after the next one.

The stability of the offense-defense balance isn’t specific to cybersecurity. The graph below shows the per capita rate of death in war from 1400 to 2013. This graph contains all of humanity’s major technological revolutions. There is lots of variance from year to year but almost zero long run trend.

Does anyone have a theory of the offense-defense balance which can explain why the per-capita deaths from war should be about the same in 1640 when people are fighting with swords and horses as in 1940 when they are fighting with airstrikes and tanks?

It is very difficult to explain the variation in this graph with variation in technology. Per-capita deaths in conflict are noisy and cyclic, while progress in technology is relatively smooth and monotonic.

No previous technology has changed the frequency or cost of conflict enough to move this metric far beyond the range between the maximum and minimum that was already set between 1400 and 1650. Again, the burden of proof is raised for why we should expect AI to be different.

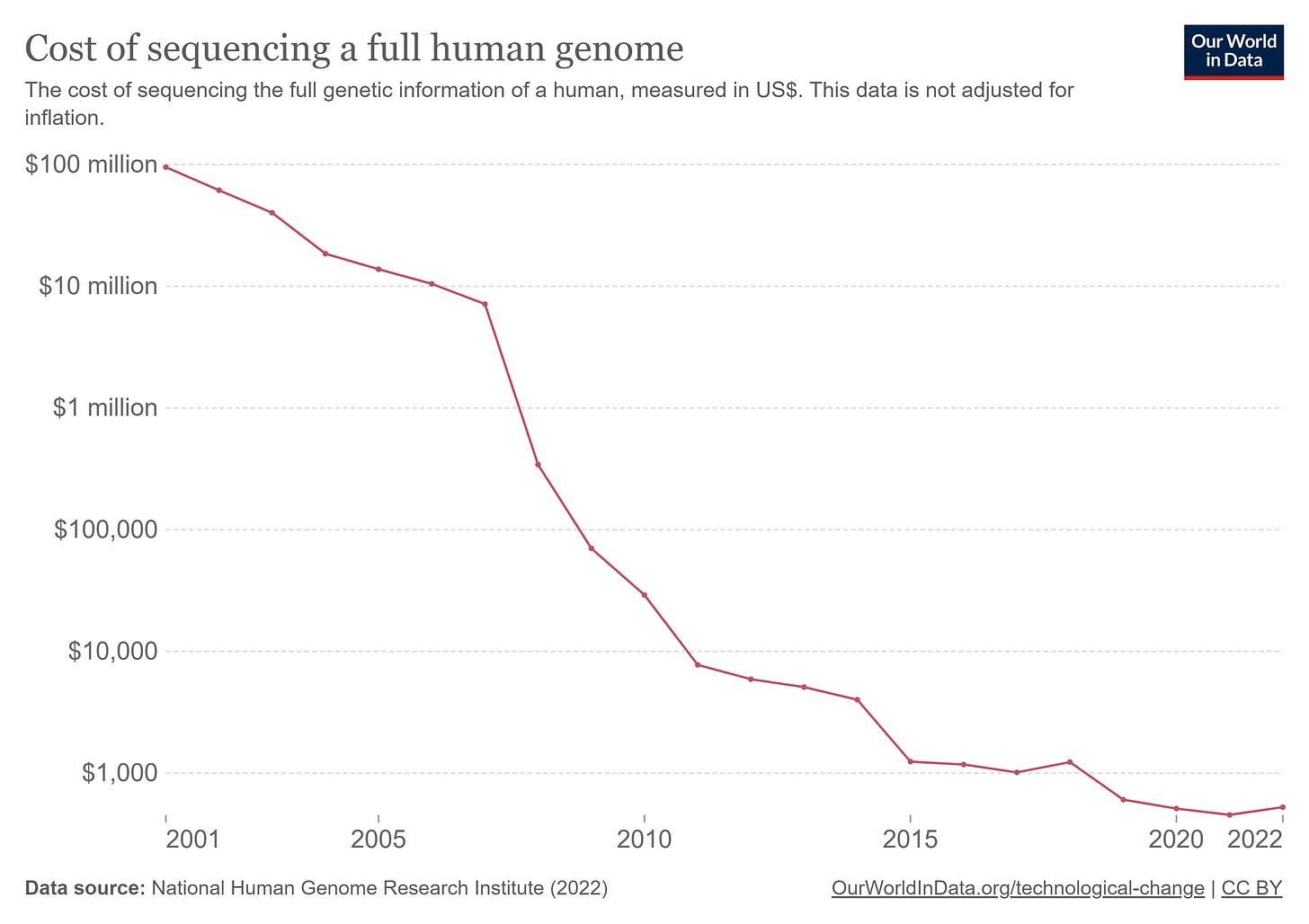

The cost to sequence a human genome has also fallen by 6 orders of magnitude and dozens of big technological changes in biology have happened along with it. Yet there has been no noticeable response in the frequency or damage of biological attacks.

Possible Reasons For Stability

Why is the offense-defense balance so stable even when the technologies behind it are rapidly and radically changing? The main contribution of this post is just to support the importance of this question with empirical evidence, but here is an underdeveloped theory.

The main thing is that the clean distinction between attackers and defenders in the theory of the offense-defense balance does not exist in practice. All attackers are also defenders and vice-versa. Invader countries have to defend their conquests and hackers need to have strong information security.

So if there is some technology which makes invading easier than defending or info-sec easier than hacking, it might not change the balance of power much because each actor needs to do both. If offense and defense are complements instead of substitutes then the balance between them isn’t as important.

What does this argument predict for the future of AI? It does not predict that the future will be very similar to today. Even though the offense-defense balance in cybersecurity is about the same today as it was in the 1970s, there have been massive changes in technology and society since then. AI is clearly the defining technology of this century.

But it does predict that the big changes from AI won’t come from huge upsets to the offense-defense balance. The changes will look more like the industrial revolution and less like small terrorist groups being empowered to take down the internet or destroy entire countries.

Maybe in all of these cases there are threshold effects waiting around the corner, or maybe AI is just completely different from all of our past technological revolutions, but that’s a claim that needs a lot of evidence to be proven. So far, the offense-defense balance seems to be very stable through large technological change, and we should expect that to continue.

Well

>Invader countries have to defend their conquests and hackers need to have strong information security.

One place where offense went way ahead of defense is with nukes.

However, nukes are sufficiently hard to make that only a few big powers have them. Hence the balance of power under MAD.

If destruction is easy enough, someone will do it.

In the war example, as weapon lethality went up, the fighters moved further apart. So long as both sides have similar weapons and tactics, there exists some range at which you aren't so close as to be instakilled, nor are you so far as to have no hope of attacking. This balance doesn't apply to civilian casualties.

It isn't clear that the offense-defense balance directly affects the number of deaths in a conflict in the way that you claim. For example, machine-gun nests benefited the defenders significantly, but could quite easily have resulted in more deaths in warfare, because attackers kept using tactics that hadn't yet accounted for them.

I don't know why you'd think that compute would be the limiting factor here. Absent AI, there are limited ways in which to deploy more compute.