Last week’s episode explored common traits of complex adaptive systems, mostly using examples from the natural world.

Hardline proponents of the clockwork worldview might argue that humans and human-made systems are different. We are not ants or trees. We may share some traits with complex systems, but we have become conscious of our ability to think. Surely this awareness of ourselves and our environment makes us capable of planning and control?

Our mind is capable of great things, but human intelligence and consciousness do not reduce the complexity of emergent human behavior. Quite the contrary. Interactions between simple components like ants can lead to complex behavior; when conscious agents interact, the result is not less complex but more so. In ants, the emergent behavior is a living bridge. In humans, it is technology, civilization, and social media.

What is it about technology that increases volatility, uncertainty, complexity, and ambiguity (the VUCA acronym that has become a coping mechanism in managerial circles)?

In episode 03, I argued that digital disruption - specifically the ability to update software over the air - displaced the age of mass production. But digital disruption can be felt far beyond commerce.

One of the main reasons information technology has made our society more complex is that it increases the number of interactions between participants in society. Social media and mobile devices have made us more interconnected and have shaped the analog world in ways nobody predicted.
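A back-of-the-envelope count shows why more connectivity means disproportionately more interactions. This assumes we only count possible one-to-one links between $n$ participants (a simplification: real networks are sparser and also include group interactions). Under that assumption, the number of possible pairs grows roughly with the square of the number of participants:

$$
L(n) = \binom{n}{2} = \frac{n(n-1)}{2}, \qquad L(10) = 45, \qquad L(1{,}000) = 499{,}500
$$

A hundredfold increase in participants yields a more than ten-thousandfold increase in potential interactions.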

We have only recently started to recognize the effects of social media and smartphones on our biological makeup. Consider the notifications you receive on your phone. When we receive likes or texts, our brain releases dopamine. Dopamine is the same neurotransmitter that is released when we eat or have sex. This molecule evolved to make us feel good when we meet a survival need.

Likes and text messages are not essential for our survival, but they do take advantage of a hardwired need for social connection. In other words, we have collectively opted into a technology that lets software jack into the pleasure center of our brains. As it turns out, there are downsides to giving away root access to our behavioral operating system.

At the outset, social media seemed like an innocuous innovation. When Facebook was created as a network to connect friends, nobody would have guessed that liking and poking friends could have geopolitical ramifications such as the Cambridge Analytica scandal or Russian interference in the 2016 US presidential election.

How did we go from FarmVille to MAGA in one decade?

As a rule, the Internet disintermediates. Producers and consumers have found new ways to connect to each other, thereby creating existential problems for gatekeepers, brokers, and mediators. With fewer middlemen, there is less moderation and more variance. The same is happening in the media sector:

The baby boom generation had movies and 30-minute sitcoms. The Internet generations X, Y, and Z have those, plus YouTube channels, Netflix binge-watching, 5-second TikToks, and more.

Before the Internet, there were only a few sources of truth: a handful of newspapers and television networks controlled the narrative. Today, distribution is decentralizing. Everyone and their dog has a megaphone through Twitter, Substack, Reddit, and so on.

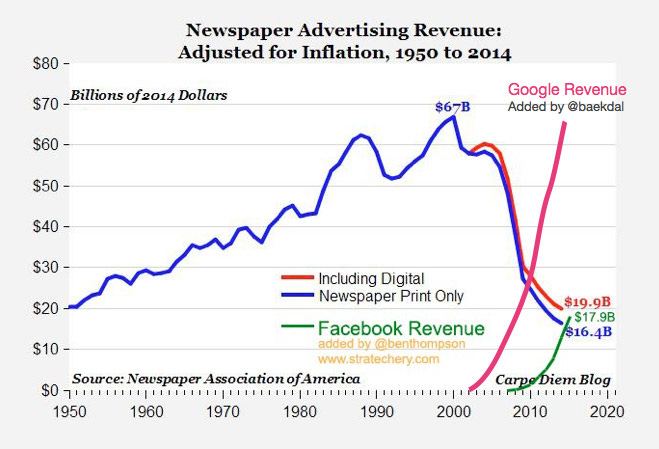

Tech is eating the media's lunch, both in eyeballs and in advertising revenue.

The battle over control of the media is one of the most important stories of this decade. Human behavior is governed by narratives in the same way that ant behavior is directed by pheromones. For most of history, humans have used stories to signal information to each other. In a connected world, it’s no longer the actual story that matters, but the story that people tell about the story. This is called a narrative, and narratives are forged in the fires of media.

For an example from current events, look at the story that is unfolding on Twitter. The events matter, but how they are framed and folded into a narrative is even more important. There is a mainstream frame, and then there are more contrarian takes. You be the judge.

Midjourney: A newspaper printed in the fires of Mount Doom

(For a deep dive into the importance of narratives, I recommend Ben Hunt’s Epsilon Theory.)

The disintermediation of narratives leads to an epistemic dilemma: how can society reach consensus about what is true in an environment that defies moderation and control? To understand this dilemma, we need to investigate emergent properties of the social media ecosystem, such as echo chambers. People inside an echo chamber have become immune to any ideas or arguments that challenge their belief system.

Look under the hood of search engines and social media platforms, and you’ll find machine-learning algorithms that are mostly black boxes. While they may have been designed with seemingly good intentions (engagement and personalization), their output can have unintended consequences.

For example, the same search query can return different results for different people, depending on what the algorithm thinks each user wants to see. Seen in this light, the Internet is not a window onto the world but a mirror that reflects our own biases back at us.
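The mirror effect can emerge from a very simple feedback loop. The sketch below is a hypothetical toy model, not how any real search engine or social network ranks content. It assumes a feed split among three topics, a user who engages with each topic at a fixed rate, and a recommender that allocates the next feed in proportion to the engagement each topic just earned. The function name `personalize` and all the numbers are illustrative assumptions.

```python
# Hypothetical toy model of a personalization feedback loop.
# Not any real platform's ranker: each round, the feed is split among topics,
# the user engages with each topic at a fixed rate, and the next feed is
# allocated in proportion to the engagement each topic just earned.


def personalize(preferences: dict[str, float], rounds: int) -> list[dict[str, float]]:
    """Return each topic's share of the feed after every round, starting from an even split."""
    share = {topic: 1 / len(preferences) for topic in preferences}
    history = [dict(share)]
    for _ in range(rounds):
        # Engagement = share of the feed a topic got x the user's engagement rate for it.
        engagement = {t: share[t] * preferences[t] for t in preferences}
        total = sum(engagement.values())
        # Reallocate the next feed in proportion to engagement (rich-get-richer update).
        share = {t: engagement[t] / total for t in preferences}
        history.append(dict(share))
    return history


if __name__ == "__main__":
    # Hypothetical user with only a mild preference for topic "a".
    prefs = {"a": 0.5, "b": 0.3, "c": 0.2}
    for step, feed in enumerate(personalize(prefs, rounds=10)):
        print(step, {t: round(s, 3) for t, s in feed.items()})
```

With these assumed preferences, topic "a" starts at one third of the feed and, after ten rounds, takes up more than 99% of it, even though the user engages with it only half the time. Nobody designed the bubble; it emerges from the loop.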

Midjourney: A high-resolution, colorful network graph of filter bubbles

The resulting filter bubble adds to the echo chamber effect. As people get into the habit of reading sources that confirm their pre-existing beliefs, they also start to self-select into groups with like-minded people. It is tempting to dismiss echo chambers as distant corners of the Internet, populated by flat-earthers and 9/11 truthers, but all media consumers are affected by this trend.

This very newsletter is a tiny blip in a small cluster of systems-thinking nerds, attempting to reach people in a Taylorist galaxy far, far away. Feel free to do your part in the culture war by forwarding this newsletter to your friends in bureaucratic organizations.

As if filter bubbles and echo chambers weren’t bad enough, media companies (not just tech companies) are competing for attention and have an incentive to optimize for engagement. Because people are more likely to share content that makes them angry or upset, this creates a bias toward outrage and emotion, adding to the volatility of our social climate. In short, the current media paradigm creates echo chambers while pitting their inhabitants against each other.

I’m afraid I have more bad news. Serious research by serious people suggests that civilizations can collapse when they are overwhelmed by the amount of information they create. This is called the information scaling threshold:

> The internet has massively increased the complexity of our information environment, but hasn’t yet produced the tools to make sense of it. Old forms of social sensemaking—institutions, universities, democracy, tradition—all seem to be DDOS’d by the new information environment.
>
> …
>
> The timing here seems unfortunate. We’re facing planetary challenges: climate change, global pandemics, mass extinctions, increasing geopolitical tension, financial crises, looming nuclear threats… What a time to be hitting the information scaling threshold!
>
> But, then, maybe the information scaling threshold is why we’re experiencing these crises in the first place? As our problems get more complex, our ability to meaningfully coordinate breaks down.

Exploring the tools to make sense of this complex environment is the raison d’être of this publication. The Internet genie is out of the bottle, and there’s no going back. Instead of reverting to reductionist, linear, and deterministic tactics, we need to find a way to leverage these complex systems to our advantage.

Next week I’ll explore one such sense-making tool: systems thinking.